RNN (Recurrent Neural Network)

Recurrent Neural Networks (RNNs) are a type of neural network that is able to process sequential data, such as time series or natural language. They are particularly useful for tasks that involve understanding context or maintaining an internal state, such as language translation or speech recognition.

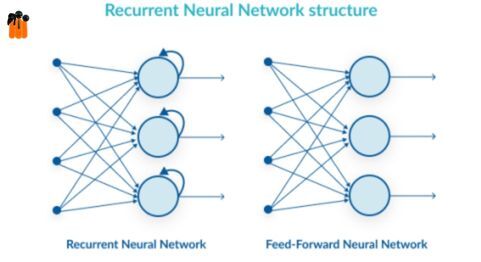

RNNs are called “recurrent” because they perform the same operation on every element of a sequence, and the hidden state computed for one element is fed back into the network, together with the next element, as input for the next step. This feedback loop allows the network to maintain an internal state that summarizes what it has seen so far, which it uses to process and understand the input sequence.

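The basic building block of an RNN is the recurrent cell (sometimes called a recurrent neuron). At each time step it takes the current input and the previous hidden state and produces a new hidden state and an output; the input, output, and hidden state are all vectors. The cell has learned weight matrices, one that transforms the input and one that transforms the previous hidden state, and the same weights are reused at every time step. The new hidden state is typically computed by applying a nonlinear activation function such as tanh to the sum of the two transformed vectors plus a bias, and the output is then computed from the new hidden state, usually with another linear transformation.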
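A single time step of such a cell can be sketched in plain Python as follows. This is a minimal illustration assuming the common "Elman-style" formulation with tanh; the names `W_xh`, `W_hh`, `W_hy`, `b_h`, `b_y`, the helper `matvec`, and the toy sizes and weight values are our own choices, not part of any particular library.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_step(x: Vector, h_prev: Vector, W_xh: Matrix, W_hh: Matrix, b_h: Vector,
             W_hy: Matrix, b_y: Vector) -> Tuple[Vector, Vector]:
    """One time step of a simple (Elman-style) recurrent cell:

    h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)
    y_t = W_hy h_t + b_y
    """
    pre = [a + b + c for a, b, c in zip(matvec(W_xh, x), matvec(W_hh, h_prev), b_h)]
    h = [math.tanh(p) for p in pre]
    y = [a + b for a, b in zip(matvec(W_hy, h), b_y)]
    return h, y


# Toy sizes (assumed for illustration): input 2, hidden 3, output 1.
W_xh = [[0.5, -0.3], [0.1, 0.2], [-0.4, 0.6]]
W_hh = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 0.0, 0.1]]
b_h = [0.0, 0.0, 0.0]
W_hy = [[1.0, 1.0, 1.0]]
b_y = [0.0]

h = [0.0, 0.0, 0.0]  # initial hidden state
for x in [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]:
    h, y = rnn_step(x, h, W_xh, W_hh, b_h, W_hy, b_y)  # same weights at every step
    print(h, y)
```

Note that the loop passes the same weight matrices to every call; only the hidden state `h` changes from step to step, which is exactly the feedback loop described above.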

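One of the main challenges with RNNs is that they can be difficult to train because of the vanishing gradient problem. During training, the error signal is propagated backward through every time step (backpropagation through time), and at each step the gradient is multiplied by another factor; when these factors are smaller than one, the gradients of the weights shrink exponentially with the number of steps, making it difficult for the network to learn dependencies between distant elements of the sequence.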
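To see the effect numerically, here is a minimal sketch using a toy one-unit RNN, h_t = tanh(w * h_{t-1} + u * x_t), with weights w = 0.9 and u = 0.5 chosen arbitrarily for illustration. The function multiplies the per-step derivatives w * (1 - h_t^2) to obtain the gradient of the final state with respect to the initial one; since each factor is at most 0.9 here, the product decays exponentially as the sequence gets longer.

```python
import math
from typing import List


def gradient_through_time(w: float, u: float, inputs: List[float]) -> float:
    """d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + u * x_t), starting from h_0 = 0."""
    h = 0.0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + u * x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - tanh(...)^2) = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return grad


for steps in (1, 10, 50):
    print(steps, gradient_through_time(w=0.9, u=0.5, inputs=[1.0] * steps))
```

With these toy values the gradient after 50 steps is many orders of magnitude smaller than after one step, so early inputs have almost no influence on the weight updates.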

One solution to this problem is a variant of the RNN called the Long Short-Term Memory (LSTM) network, which uses gating mechanisms to control the flow of information through the network and mitigate the vanishing gradient problem. LSTMs have been used to achieve state-of-the-art performance on tasks such as language translation and speech recognition.
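The sketch below shows one step of a standard LSTM cell (input, forget, and output gates plus a candidate value, without peephole connections) in plain Python. The parameter layout (a dict of `(W, b)` pairs keyed `"i"`, `"f"`, `"o"`, `"g"`) and the hand-picked bias values are assumptions made for illustration, not trained weights. Because the cell state is updated additively and merely scaled by the forget gate, a value stored in it can survive many steps when the forget gate stays open.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, b: Vector, v: Vector) -> Vector:
    """Compute W v + b."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector,
              params: Dict[str, Tuple[Matrix, Vector]]) -> Tuple[Vector, Vector]:
    """One step of a standard LSTM cell (no peephole connections)."""
    z = x + h_prev  # list concatenation: [x_t, h_{t-1}]
    i = [sigmoid(v) for v in affine(*params["i"], z)]    # input gate
    f = [sigmoid(v) for v in affine(*params["f"], z)]    # forget gate
    o = [sigmoid(v) for v in affine(*params["o"], z)]    # output gate
    g = [math.tanh(v) for v in affine(*params["g"], z)]  # candidate values
    # Additive update: the old cell state is scaled by f, not squashed by a nonlinearity.
    c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c_prev, i, g)]
    h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
    return h, c


# Hand-picked (hypothetical) parameters for input size 1 and hidden size 1:
# forget gate almost always open, input gate almost always closed.
params = {
    "f": ([[0.0, 0.0]], [10.0]),
    "i": ([[0.0, 0.0]], [-10.0]),
    "o": ([[0.0, 0.0]], [0.0]),
    "g": ([[1.0, 0.0]], [0.0]),
}
h, c = [0.0], [1.0]  # start with a stored value of 1.0 in the cell
for _ in range(20):
    h, c = lstm_step([1.0], h, c, params)
print(c)  # stays close to 1.0 after 20 steps
```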

Another variant is the Gated Recurrent Unit (GRU), which is similar to the LSTM but has fewer parameters; this can make it faster to train and, on smaller datasets, less prone to overfitting.
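The difference in size is easy to count. An LSTM layer has four weight blocks (three gates plus the candidate), while a GRU has three (reset gate, update gate, candidate), each acting on the concatenated input and hidden state. The functions below are a rough sketch assuming one bias vector per block; some libraries (PyTorch, for instance) keep two bias vectors per block, so their totals come out slightly higher, but the 4:3 ratio of the weight matrices still holds.

```python
def lstm_params(input_size: int, hidden_size: int) -> int:
    """Weights + biases of one LSTM layer: 4 blocks (input, forget, output gates, candidate)."""
    return 4 * (hidden_size * (input_size + hidden_size) + hidden_size)


def gru_params(input_size: int, hidden_size: int) -> int:
    """Weights + biases of one GRU layer: 3 blocks (reset gate, update gate, candidate)."""
    return 3 * (hidden_size * (input_size + hidden_size) + hidden_size)


for n_in, n_h in [(10, 20), (128, 256)]:
    print(n_in, n_h, lstm_params(n_in, n_h), gru_params(n_in, n_h))
```

For an input size of 10 and a hidden size of 20, this gives 2480 parameters for the LSTM and 1860 for the GRU.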

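RNNs can also be combined into larger architectures: several recurrent layers can be stacked on top of each other (as in stacked LSTMs), and bidirectional RNNs read the sequence both forward and backward. Both designs can further improve performance on certain tasks.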
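Both ideas can be sketched with a toy one-unit tanh RNN. In the bidirectional case, a second RNN with its own weights reads the reversed sequence, and its states are reversed back so that position t pairs a summary of everything before t with a summary of everything after t. In the stacked case, the state sequence of one layer becomes the input sequence of the next. The helper names and all weight values here are hypothetical, chosen only for illustration.

```python
import math
from typing import List, Tuple


def run_rnn(xs: List[float], w_x: float, w_h: float) -> List[float]:
    """Scalar tanh RNN that returns the hidden state at every time step."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states


def bidirectional(xs: List[float], fwd: Tuple[float, float],
                  bwd: Tuple[float, float]) -> List[Tuple[float, float]]:
    """Pair each step's forward state with the backward state for the same position."""
    forward = run_rnn(xs, *fwd)
    backward = run_rnn(xs[::-1], *bwd)[::-1]  # run on reversed input, then realign
    return list(zip(forward, backward))


def stacked(xs: List[float], layers: List[Tuple[float, float]]) -> List[float]:
    """Feed the state sequence of each layer in as the input sequence of the next."""
    seq = xs
    for w_x, w_h in layers:
        seq = run_rnn(seq, w_x, w_h)
    return seq


xs = [0.5, -1.0, 2.0, 0.0]
print(bidirectional(xs, fwd=(1.0, 0.5), bwd=(0.8, 0.3)))
print(stacked(xs, layers=[(1.0, 0.5), (1.5, 0.2)]))
```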

RNNs have a wide range of applications, including natural language processing, speech recognition, time series forecasting, and image captioning. In natural language processing, RNNs are used to process sequential data such as text and can be used for tasks such as language translation, text summarization, and sentiment analysis. In speech recognition, RNNs are used to process audio data and can be used for tasks such as speech-to-text and speaker identification.

In time series forecasting, RNNs can be used to predict future values of a time series based on past values. This can be useful in fields such as finance, where it is important to predict stock prices or currency exchange rates.
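A common way to prepare such data, sketched here as one option rather than the only one, is to slide a fixed window over the series and pair each window of past values with the value that follows it. An RNN then reads each window one step at a time and is trained to predict the target. The function name `make_windows` and the window size are our own illustrative choices.

```python
from typing import List, Tuple


def make_windows(series: List[float], window: int) -> List[Tuple[List[float], float]]:
    """Turn a series into (previous `window` values, next value) training pairs."""
    return [(series[i:i + window], series[i + window])
            for i in range(len(series) - window)]


pairs = make_windows([1.0, 2.0, 3.0, 4.0, 5.0], window=3)
print(pairs)  # [([1.0, 2.0, 3.0], 4.0), ([2.0, 3.0, 4.0], 5.0)]
```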

In image captioning, RNNs can be used to generate captions for images based on the objects and scenes present in the image.

Overall, RNNs are a powerful type of neural network that is well-suited for processing sequential data and understanding context. They have achieved state-of-the-art performance on a wide range of tasks and have many potential applications in fields such as natural language processing, speech recognition, and time series forecasting.

One of the most important aspects of RNNs is their ability to retain information across many time steps. Traditional neural networks, such as feedforward networks, take a fixed-size input all at once and keep no information from one input to the next. RNNs, on the other hand, have an internal memory (the hidden state) that carries information from previous inputs forward, which is crucial for understanding sequential data. The information carried forward in this way is often called context, and it is vital in many real-world applications.
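A small, hypothetical comparison makes the role of context concrete. The two sequences below end with the same input but differ in their first element. A memoryless (feedforward) layer applied to the last input gives identical outputs, while a toy one-unit RNN, with weights picked only for illustration, ends in different states because the earlier input is still reflected in its hidden state.

```python
import math
from typing import List


def final_state(xs: List[float], w_x: float = 1.0, w_h: float = 0.8) -> float:
    """Scalar tanh RNN: the returned state depends on every input seen so far."""
    h = 0.0
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
    return h


def feedforward(x: float, w_x: float = 1.0) -> float:
    """A memoryless layer: the output depends only on the current input."""
    return math.tanh(w_x * x)


a = [1.0, 0.0, 0.5]
b = [-1.0, 0.0, 0.5]  # same last input, different history
print(final_state(a), final_state(b))          # different: the first input still matters
print(feedforward(a[-1]), feedforward(b[-1]))  # identical: history is ignored
```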

Another important aspect of RNNs is their ability to process variable-length sequences. Traditional feedforward networks, by contrast, require inputs of a fixed length. Because an RNN applies the same weights at every time step, it can handle sequences of any length, which is a significant advantage in many real-world applications such as text, speech, and video processing.
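The recurrent loop itself works for any sequence length. When sequences are grouped into batches, however, implementations commonly pad them to a common length and mask out the padding so that it does not affect the result. The sketch below shows that idea with a toy one-unit RNN; the function names `pad` and `masked_final_states` and the weights are hypothetical, and real libraries provide their own utilities for this.

```python
import math
from typing import List


def pad(seqs: List[List[float]], value: float = 0.0) -> List[List[float]]:
    """Right-pad every sequence to the length of the longest one."""
    max_len = max(len(s) for s in seqs)
    return [s + [value] * (max_len - len(s)) for s in seqs]


def masked_final_states(padded: List[List[float]], lengths: List[int],
                        w_x: float = 0.5, w_h: float = 0.5) -> List[float]:
    """Run one scalar tanh RNN over each padded sequence, skipping the padding."""
    finals = []
    for seq, n in zip(padded, lengths):
        h = 0.0
        for t, x in enumerate(seq):
            if t < n:  # positions at or beyond the true length are padding
                h = math.tanh(w_x * x + w_h * h)
        finals.append(h)
    return finals


seqs = [[1.0, 2.0], [0.5, 0.5, 0.5, 0.5], [3.0]]
print(masked_final_states(pad(seqs), [len(s) for s in seqs]))
```

Every sequence, whatever its length, is summarized by a hidden state of the same size, and the padded positions leave that summary unchanged.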

RNNs can also be used for generative tasks, such as text generation and music composition. In these tasks, the network is trained on a dataset of sequences to predict the next element at each step, and it is then used to generate new sequences that are similar to the training data. Generation works by sampling the next element from the probability distribution the network predicts, feeding that sample back in as the next input, and repeating; this allows the network to produce new and unique sequences.
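The sampling loop can be sketched as follows. The four-symbol vocabulary, the hidden size, and the random (untrained) weights are assumptions for illustration, so the generated text is meaningless; with trained weights the same loop would produce text resembling the training data. The `temperature` parameter rescales the logits before the softmax: values below 1 make sampling more conservative, values above 1 more varied.

```python
import math
import random
from typing import List

Matrix = List[List[float]]
VOCAB = ["a", "b", "c", "."]  # hypothetical toy vocabulary


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def generate(W_xh: Matrix, W_hh: Matrix, W_hy: Matrix, start: str, length: int,
             temperature: float, rng: random.Random) -> str:
    h = [0.0] * len(W_hh)
    token = VOCAB.index(start)
    out = [start]
    for _ in range(length):
        x = [1.0 if i == token else 0.0 for i in range(len(VOCAB))]  # one-hot input
        h = [math.tanh(sum(w * xi for w, xi in zip(W_xh[j], x)) +
                       sum(w * hi for w, hi in zip(W_hh[j], h))) for j in range(len(h))]
        logits = [sum(w * hi for w, hi in zip(row, h)) for row in W_hy]
        probs = softmax(logits, temperature)
        token = rng.choices(range(len(VOCAB)), weights=probs, k=1)[0]  # sample next token
        out.append(VOCAB[token])  # the sample becomes the next input
    return "".join(out)


rng = random.Random(0)
hidden = 8
W_xh = [[rng.gauss(0, 0.5) for _ in VOCAB] for _ in range(hidden)]
W_hh = [[rng.gauss(0, 0.5) for _ in range(hidden)] for _ in range(hidden)]
W_hy = [[rng.gauss(0, 0.5) for _ in range(hidden)] for _ in VOCAB]
print(generate(W_xh, W_hh, W_hy, start="a", length=10, temperature=0.8, rng=rng))
```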

RNNs can also be used in conjunction with other types of neural networks, such as convolutional neural networks (CNNs) and fully connected networks (FCNs) to form hybrid architectures, known as CNN-RNN and FCN-RNN. These hybrid architectures can effectively combine the strengths of both types of networks to improve performance on various tasks such as image captioning, video classification, and speech recognition.
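As a rough illustration of the CNN-RNN pattern, for instance in video classification, the sketch below extracts one convolutional feature from each frame and then runs a recurrent cell over the sequence of frame features. To keep it self-contained, the "CNN" is reduced to a single hand-written 1-D filter followed by ReLU and max-pooling, the frames are four-pixel rows, and all weights are hypothetical; a real system would use a trained 2-D CNN and a learned recurrent layer.

```python
import math
from typing import List


def conv1d_feature(frame: List[float], kernel: List[float]) -> float:
    """'Valid' 1-D convolution (cross-correlation, as deep learning libraries compute it),
    then ReLU, then global max-pooling: one feature value per frame."""
    k = len(kernel)
    responses = [max(0.0, sum(frame[i + j] * kernel[j] for j in range(k)))
                 for i in range(len(frame) - k + 1)]
    return max(responses)


def cnn_rnn(frames: List[List[float]], kernel: List[float],
            w_x: float = 1.0, w_h: float = 0.5) -> float:
    """Extract a convolutional feature from each frame, then run a tanh RNN over time."""
    h = 0.0
    for frame in frames:
        feat = conv1d_feature(frame, kernel)  # convolutional part: per-frame features
        h = math.tanh(w_x * feat + w_h * h)   # recurrent part: combines frames in order
    return h


# Toy "video": three frames of four pixels each; the kernel responds to a rising edge.
frames = [[0.0, 0.0, 1.0, 1.0], [0.0, 1.0, 1.0, 0.0], [0.0, 0.0, 0.0, 0.0]]
print(cnn_rnn(frames, kernel=[-1.0, 1.0]))
```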

In conclusion, Recurrent Neural Networks (RNNs) are a powerful type of neural network that is well suited to processing sequential data and understanding context. Their internal memory lets them retain information from previous inputs, which is crucial for understanding sequential data; they can process variable-length sequences; and they can be used for generative tasks and combined with other types of neural networks in hybrid architectures. RNNs have achieved state-of-the-art performance on a wide range of tasks and have many potential applications in fields such as natural language processing, speech recognition, and time series forecasting.

Thanks for reading…

Author:

| SevenMentor Pvt Ltd.

© Copyright 2021 | Sevenmentor Pvt Ltd.