Data Splitting in Machine Learning Using Python

Effective data splitting is a crucial step in building machine learning models that generalize to new data. In this blog post, we take a practical approach: using a real-world dataset, we implement data splitting in Python step by step, so you gain practical insight into why the data is split and how to do it.

For Free, Demo classes Call: 02071171500


Dataset Selection:

For our demonstration, we’ll use the famous Iris dataset, a classic machine learning dataset that contains measurements of several features of iris flowers. The dataset is available on Kaggle: download it, place the file in your Python working directory, and load it from there, so that we can focus on the implementation.


Understanding the Dataset:

Before diving into data splitting, let’s briefly explore the Iris dataset. It has four features (sepal length, sepal width, petal length, and petal width) and a target variable indicating the species of iris (setosa, versicolor, or virginica).

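Once the data is loaded into a pandas DataFrame named df, we can explore it with the df.info() and df.head() methods. The info() method prints a concise summary of the DataFrame: its column names, non-null counts, and data types.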

df.info()

The head() method in pandas returns the first n rows of a DataFrame (five by default).

df.head()
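The two calls above assume df already exists. A minimal, self-contained sketch of the loading step follows. As an assumption, it uses the copy of Iris bundled with scikit-learn (load_iris with as_frame=True) rather than the Kaggle CSV, so it runs without a download; the species column name is our own choice.

```python
# Minimal sketch: load Iris into a pandas DataFrame and explore it.
# Assumption: we use scikit-learn's bundled copy instead of the Kaggle CSV file.
import pandas as pd
from sklearn.datasets import load_iris

iris = load_iris(as_frame=True)
df: pd.DataFrame = iris.frame  # four feature columns plus a numeric "target" column

# Hypothetical helper column: map the numeric target (0, 1, 2) to species names.
df["species"] = df["target"].map(dict(enumerate(iris.target_names)))

df.info()                            # column names, non-null counts, dtypes
print(df.head())                     # first five rows (default n=5)
print(df["species"].value_counts())  # number of samples per species
```

If you work from the Kaggle file instead, pd.read_csv on the downloaded file gives an equivalent DataFrame, though its column names differ from the ones used here.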

Let’s look at the training and testing datasets in more detail.


Training Dataset:

The training dataset is a subset of the overall dataset that is used to train a machine learning model. When we talk about training a model, we mean that the algorithm learns patterns, relationships, and dependencies within the data to make predictions or classifications.

Testing Dataset:

The testing dataset, on the other hand, is a separate subset of the data that the model has not seen during the training phase. It acts as a completely independent dataset, simulating real-world scenarios where the model encounters new, unseen examples.

You could imagine slicing the single data set as follows:

Figure 1. Slicing a single data set into a training set and test set.

Make sure that your test set meets the following two conditions:

- Is large enough to yield statistically meaningful results.

- Is representative of the data set as a whole. In other words, don’t pick a test set whose characteristics differ from those of the training set.
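Both conditions can be checked in code. This is a sketch under stated assumptions. For size, it uses the normal approximation to the binomial, where the standard error of an accuracy p measured on n test samples is sqrt(p(1 - p) / n). The 90% accuracy and 30-sample test set are illustrative numbers. For representativeness, it uses scikit-learn's stratify argument, which keeps the class proportions equal in both subsets.

```python
# Sketch: checking test-set size and representativeness on Iris.
import math
from collections import Counter

from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# Condition 1 (size): standard error of an accuracy estimate (normal approximation).
def accuracy_standard_error(p: float, n: int) -> float:
    return math.sqrt(p * (1 - p) / n)

n_test = 30  # illustrative: 20% of Iris's 150 rows
se = accuracy_standard_error(0.9, n_test)
# About 0.055, so a 95% interval is roughly +/- 11 percentage points.
print(f"standard error with n={n_test}: {se:.3f}")

# Condition 2 (representativeness): stratify=y preserves class proportions.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)
print("train class counts:", sorted(Counter(y_train).values()))
print("test class counts: ", sorted(Counter(y_test).values()))
```

A wide interval like this one is a reminder that a small test set yields only a rough estimate of performance.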

Assuming that your test set meets the preceding two conditions, your goal is to create a model that generalizes well to new data, and the test set serves as a proxy for that new data. For example, consider the following figure. Notice that the model learned from the training data is very simple. This model doesn’t do a perfect job: a few predictions are wrong. However, it does about as well on the test data as it does on the training data. In other words, this simple model does not overfit the training data.
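One practical way to apply this idea is to compare a model's accuracy on the training set with its accuracy on the test set. The sketch below assumes logistic regression as the "simple model". That choice is ours; the figure may show a different model.

```python
# Sketch: compare training and test accuracy to gauge generalization.
# Assumption: LogisticRegression stands in for "a simple model".
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)  # the model sees only the training subset

train_acc = model.score(X_train, y_train)
test_acc = model.score(X_test, y_test)
print(f"train accuracy: {train_acc:.3f}, test accuracy: {test_acc:.3f}")
# A large gap (train much higher than test) suggests overfitting;
# similar scores suggest the model generalizes to unseen data.
```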

Never train on test data. If you see surprisingly good results on your evaluation metrics, it might be a sign that you are accidentally training on the test set. For example, unexpectedly high accuracy might indicate that test data has leaked into the training set.
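Leakage often happens indirectly. A typical case is fitting a preprocessing step, such as feature scaling, on the full dataset before splitting. The sketch below shows one common safeguard: wrap the scaler and the model in a scikit-learn Pipeline and fit it on the training set only. StandardScaler and logistic regression are illustrative choices.

```python
# Sketch: keep test-set statistics out of preprocessing by fitting a
# Pipeline on the training data only.
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# Leaky pattern to avoid: StandardScaler().fit(X) would use the test rows too.
pipe = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
pipe.fit(X_train, y_train)  # scaler means/variances come from X_train only

scaler = pipe.named_steps["standardscaler"]
print(np.allclose(scaler.mean_, X_train.mean(axis=0)))  # True
print(f"test accuracy: {pipe.score(X_test, y_test):.3f}")
```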


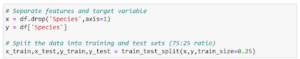

Now, let’s move on to the implementation of data splitting using Python. We’ll use the train_test_split function from scikit-learn to split the dataset into training and test sets.
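A minimal sketch of the split follows. The values test_size=0.2 and random_state=42 are illustrative choices, not values taken from the original post. As in the earlier examples, the data comes from scikit-learn's bundled copy of Iris.

```python
# Sketch: split Iris into training and test sets with scikit-learn.
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

iris = load_iris(as_frame=True)
x = iris.data    # DataFrame with the four feature columns
y = iris.target  # Series with the species label (0, 1, 2)

x_train, x_test, y_train, y_test = train_test_split(
    x, y,
    test_size=0.2,    # 20% of the rows go to the test set
    random_state=42,  # fixed seed so the shuffle is reproducible
)

print(x_train.shape, x_test.shape)  # (120, 4) (30, 4)
```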

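The train_test_split function returns four objects, conventionally unpacked as x_train, x_test, y_train, y_test. Here, x_train and y_train hold the features and target variable of the training set, while x_test and y_test hold those of the test set. The test_size parameter specifies the proportion of the dataset to include in the test split (a float between 0 and 1; an integer is treated as an absolute number of test samples), and random_state fixes the random shuffling so that the split is reproducible.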
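The short check below illustrates both parameters. The seed values 42 and 7 are arbitrary. Calling train_test_split twice with the same random_state yields identical test rows, and passing an integer test_size requests an exact number of test samples.

```python
# Sketch: random_state makes splits reproducible; an integer test_size
# requests an exact number of test samples.
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

_, X_test_a, _, _ = train_test_split(X, y, test_size=0.2, random_state=42)
_, X_test_b, _, _ = train_test_split(X, y, test_size=0.2, random_state=42)
_, X_test_c, _, _ = train_test_split(X, y, test_size=0.2, random_state=7)

same_seed = bool((X_test_a == X_test_b).all())
print(same_seed)  # True: the same seed gives the same test rows
print(bool((X_test_a == X_test_c).all()))  # a different seed usually changes the split

_, X_test_int, _, _ = train_test_split(X, y, test_size=30, random_state=42)
print(X_test_int.shape)  # (30, 4)
```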

Validation Set:

Additionally, it’s good practice to create a validation set for tuning model hyperparameters, so that the test set is reserved for the final evaluation. We can achieve this by splitting the training set further.
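One way to do this is to call train_test_split twice. The sketch below rests on assumptions of our own: a 60/20/20 train/validation/test ratio, k-nearest neighbours as the model, and a small list of candidate k values. Only the validation set is used to pick k. The chosen model is then refit on training plus validation data and scored once on the test set.

```python
# Sketch: 60/20/20 train/validation/test split plus validation-based tuning.
# Assumptions: the ratio, KNeighborsClassifier, and the k candidates are illustrative.
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

# Step 1: hold out 20% of all rows as the final test set.
X_temp, X_test, y_temp, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)
# Step 2: split the remaining 80% into train and validation;
# 0.25 * 0.8 = 0.2 of the original data ends up in validation.
X_train, X_val, y_train, y_val = train_test_split(
    X_temp, y_temp, test_size=0.25, stratify=y_temp, random_state=42
)
print(len(X_train), len(X_val), len(X_test))  # 90 30 30

# Tune on the validation set only; the test set is not touched here.
candidates = [1, 3, 5, 7, 9]
val_scores = {}
for k in candidates:
    model = KNeighborsClassifier(n_neighbors=k).fit(X_train, y_train)
    val_scores[k] = model.score(X_val, y_val)
best_k = max(val_scores, key=val_scores.get)

# Refit with the chosen k on train + validation, then evaluate once on test.
final_model = KNeighborsClassifier(n_neighbors=best_k).fit(X_temp, y_temp)
print(f"best k={best_k}, test accuracy={final_model.score(X_test, y_test):.3f}")
```

Refitting on the combined training and validation data is a design choice that lets the final model use more rows. Keeping the model trained on X_train alone is also common.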



Conclusion:

In this blog post, we’ve walked through data splitting with a practical dataset, the Iris dataset, and implemented it in Python. Using the scikit-learn library, we divided the dataset into training, validation, and test sets. This hands-on guide should help you implement data splitting in your own machine learning projects and set the stage for building models that generalize well to unseen data.

Author:

Mahesh Kankrale


© Copyright 2021 | SevenMentor Pvt Ltd.