Forward and Backward Propagation in Deep Learning

In the vast sea of neural networks, forward and backward propagation are the guiding currents that drive the learning process. Let’s walk through these two essential mechanisms and see how they let a deep learning model learn.

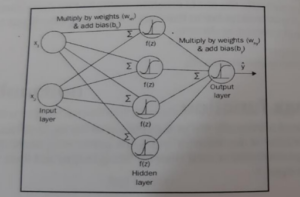

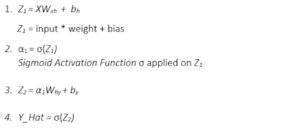

Forward Propagation

Backward Propagation

- Forward propagation starts with randomly initialized weights. Because these weights are random, the cost (also called the error or loss) will initially be high.

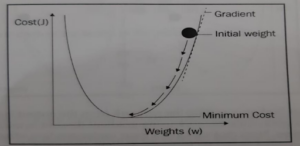

- To reduce this loss, we need to optimize the weights. So far we have used two weight matrices: Wxh (input to hidden layer) and Why (hidden to output layer).

- We can optimize these two weights using gradient descent, which repeatedly adjusts each weight by a small step in the direction that reduces the loss.

- At the end of forward propagation, we are at the output layer. We now backpropagate through the network from the output layer to the input layer, calculating the gradient of the cost function with respect to every weight between the two layers.
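
The bullets above describe one training step: a forward pass with random weights, a loss, a backward pass that computes gradients for Wxh and Why, and a gradient descent update. The sketch below is a minimal pure-Python illustration under assumptions the article does not state: a single hidden layer with sigmoid activations, no bias terms (only Wxh and Why, as in the text), a halved mean-squared-error loss, a learning rate of 0.5, and a hypothetical toy dataset (logical OR). Helper names such as `forward`, `backward` and `gradient_descent_step` are illustrative, not from any library.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_weights(rows, cols, rng):
    # Random initialization: this is why the first forward pass has a high loss.
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def forward(x, Wxh, Why):
    """Forward pass: input -> hidden (via Wxh) -> output (via Why)."""
    hidden = [sigmoid(sum(x[i] * Wxh[i][j] for i in range(len(x))))
              for j in range(len(Wxh[0]))]
    output = [sigmoid(sum(hidden[j] * Why[j][k] for j in range(len(hidden))))
              for k in range(len(Why[0]))]
    return hidden, output


def loss(X, Y, Wxh, Why):
    """Halved squared error, averaged over all samples (assumed loss)."""
    total = 0.0
    for x, y in zip(X, Y):
        _, y_hat = forward(x, Wxh, Why)
        total += 0.5 * sum((yh - yt) ** 2 for yh, yt in zip(y_hat, y))
    return total / len(X)


def backward(X, Y, Wxh, Why):
    """Backward pass: gradients of the loss w.r.t. Why, then Wxh (chain rule)."""
    dWxh = [[0.0] * len(Wxh[0]) for _ in Wxh]
    dWhy = [[0.0] * len(Why[0]) for _ in Why]
    m = len(X)
    for x, y in zip(X, Y):
        hidden, y_hat = forward(x, Wxh, Why)
        # Output-layer error signal: dLoss/dz for sigmoid + squared error.
        delta_out = [(yh - yt) * yh * (1.0 - yh) for yh, yt in zip(y_hat, y)]
        for j in range(len(hidden)):
            for k in range(len(delta_out)):
                dWhy[j][k] += hidden[j] * delta_out[k] / m
        # Propagate the error back through Why to the hidden layer.
        delta_hidden = [
            sum(delta_out[k] * Why[j][k] for k in range(len(delta_out)))
            * hidden[j] * (1.0 - hidden[j])
            for j in range(len(hidden))
        ]
        for i in range(len(x)):
            for j in range(len(hidden)):
                dWxh[i][j] += x[i] * delta_hidden[j] / m
    return dWxh, dWhy


def gradient_descent_step(W, dW, lr):
    """Move every weight a small step against its gradient (in place)."""
    for r in range(len(W)):
        for c in range(len(W[0])):
            W[r][c] -= lr * dW[r][c]


rng = random.Random(0)
# Hypothetical toy data: the logical OR of two inputs.
X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
Y = [[0.0], [1.0], [1.0], [1.0]]
Wxh = init_weights(2, 3, rng)  # input (2) -> hidden (3)
Why = init_weights(3, 1, rng)  # hidden (3) -> output (1)

print("loss with random weights:", loss(X, Y, Wxh, Why))
for epoch in range(1000):
    dWxh, dWhy = backward(X, Y, Wxh, Why)  # gradients use the current weights
    gradient_descent_step(Wxh, dWxh, lr=0.5)
    gradient_descent_step(Why, dWhy, lr=0.5)
print("loss after training:", loss(X, Y, Wxh, Why))
```

Running it prints the loss with random weights and again after 1,000 gradient descent steps; the second value should be lower. Plain Python lists are used only to keep each chain-rule step visible; a practical model would use a numerical library.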

Terminology

- Forward Pass: propagating the input forward from the input layer to the output layer.

- Backward Pass: backpropagating the error from the output layer to the input layer.

- Epoch: one complete pass over the whole training set; the number of epochs specifies how many times the neural network sees all of the training data. One epoch equals one forward pass and one backward pass for every training sample.

- Batch Size: the number of training samples used in one forward pass and one backward pass.

- Number of Iterations: the number of passes, where one pass = one forward pass + one backward pass on one batch.

Say we have 12,000 training samples and our batch size is 6,000. It will take two iterations to complete one epoch. In the first iteration, we pass the first 6,000 samples and perform the forward and backward pass; in the second iteration, we pass the next 6,000 training samples and perform the forward and backward pass. After two iterations, our neural network has seen all 12,000 training samples, which makes one epoch.
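
The arithmetic in this example can be sketched in a few lines of Python. The function names are hypothetical, and rounding up for a sample count that does not divide evenly (so a smaller final batch still counts as one iteration) is an assumption; some training setups drop that last partial batch instead.

```python
import math


def iterations_per_epoch(num_samples, batch_size):
    """Number of forward + backward passes needed to see every sample once."""
    # Assumption: a final, smaller batch still costs one iteration, hence the ceiling.
    return math.ceil(num_samples / batch_size)


def batch_ranges(num_samples, batch_size):
    """Yield (start, end) index pairs, end exclusive, for each batch of one epoch."""
    for start in range(0, num_samples, batch_size):
        yield start, min(start + batch_size, num_samples)


num_samples, batch_size = 12000, 6000
print(iterations_per_epoch(num_samples, batch_size))  # 2
for iteration, (start, end) in enumerate(batch_ranges(num_samples, batch_size), 1):
    # In real training: forward pass + backward pass on samples[start:end].
    print(f"iteration {iteration}: samples {start} to {end - 1}")
```

For the article's numbers this reports two iterations, covering samples 0 to 5999 and then 6000 to 11999.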

Author:

Sagar Gade

© Copyright 2021 | SevenMentor Pvt Ltd.