Top 150+ Data Science Interview Questions and Answers

Hey, this is Suraj. Welcome to the question-and-answer blog. Data science is a rapidly growing field that uses statistical, mathematical, and computer science techniques to extract insights and knowledge from data. Here we provide answers to the Top 150+ Data Science Interview Questions most often asked in interviews. In today’s fast-paced world, finding accurate and reliable information can be challenging. That’s why we’ve created this platform to help you get the answers you need.

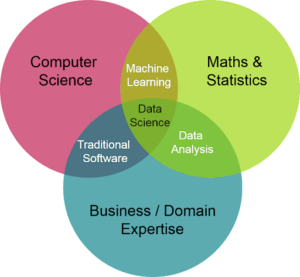

- What is data science, and what skills are required to be a successful data scientist?

Answer: Data science is an interdisciplinary field that uses statistical and computational methods, along with a range of tools, to extract insights and knowledge from data.

The skills required to be a successful data scientist include a strong foundation in statistics, programming, data visualization, machine learning, and big data technologies. Strong communication skills are also necessary to present findings and insights to both technical and non-technical stakeholders.

- Tell me the difference between supervised and unsupervised learning.

Answer:

Supervised learning is a type of machine learning where the ML algorithm is trained on labeled data. The labeled data consists of input features and corresponding output labels. The goal of the algorithm is to learn a mapping function between the input features and output labels.

Unsupervised learning is a type of machine learning where the algorithm is trained on unlabelled data. Its task is to find patterns or structures in the data without any prior knowledge of what the output should look like. In other words, the algorithm is not given any specific target to learn; it has to discover the underlying patterns and relationships in the data by itself.
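To make the difference concrete, here is a minimal sketch in Python, assuming scikit-learn and NumPy are installed. The six 2-D points and their labels are made-up illustrative values: the supervised model is given the labels `y`, while the unsupervised KMeans model sees only the features `X` and has to find the two groups on its own.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.linear_model import LogisticRegression

# Made-up 2-D points forming two obvious groups
X = np.array([[1.0, 1.2], [0.8, 1.0], [1.1, 0.9],
              [5.0, 5.2], [5.3, 4.9], [4.8, 5.1]])
y = np.array([0, 0, 0, 1, 1, 1])  # labels: used only by the supervised model

# Supervised: learn a mapping from the features X to the known labels y
clf = LogisticRegression().fit(X, y)
supervised_pred = clf.predict(np.array([[1.0, 1.0], [5.0, 5.0]]))

# Unsupervised: KMeans sees only X and groups points by similarity
km = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)
cluster_ids = km.labels_  # cluster numbers are arbitrary, not class labels
```

KMeans may call the first group 1 instead of 0: it never saw the labels, so its cluster numbers carry no meaning beyond "these points belong together".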

- What is the difference between data science and data analytics?

Answer: Data science and data analytics are two related but distinct fields that involve working with data to extract insights and inform decision-making.

Data science is a broad field that encompasses a range of techniques and tools for extracting insights and knowledge from data. It involves the use of statistical modeling, machine learning, data visualization, and other techniques to explore and analyze data, build predictive models, and generate actionable insights.

Data analytics, on the other hand, is a narrower field that focuses on analyzing data to identify patterns and trends and make data-driven decisions. It involves the use of statistical and quantitative analysis techniques to extract insights and knowledge from data.

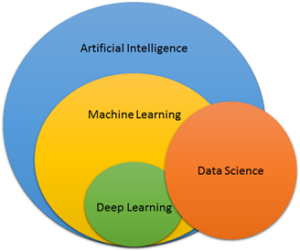

- What is the difference between machine learning, deep learning, and Artificial intelligence?

Answer: Machine learning, deep learning, and artificial intelligence (AI) are related concepts, but they have distinct differences.

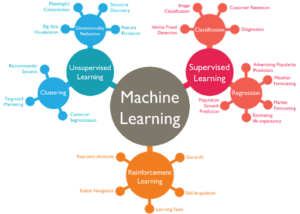

Machine learning is a subfield of AI that involves the development of algorithms and statistical models that enable computer systems to learn and improve from experience without being explicitly programmed. Machine learning algorithms are commonly classified into three types: 1) supervised learning, 2) unsupervised learning, and 3) reinforcement learning. These algorithms are used to train models to make predictions or classifications based on input data.

Deep learning is a subset of machine learning that involves the use of neural networks to learn from large and complex datasets. Neural networks are composed of multiple layers of interconnected nodes that can learn to recognize patterns and features in the data. Deep learning algorithms have been used to achieve state-of-the-art performance in image recognition, speech recognition, and natural language processing.

Artificial intelligence refers to the broader field of developing intelligent computer systems that can perform tasks that typically require human-level intelligence, such as reasoning, perception, and decision-making. AI encompasses a wide range of techniques and approaches, including machine learning and deep learning, as well as rule-based systems, expert systems, and knowledge representation.

- What is the difference between business analytics and data analytics?

Answer: Business analytics and data analytics are two related but distinct fields that involve working with data to extract insights and inform decision-making in a business context.

Data analytics is a broad field that involves the use of statistical and quantitative analysis techniques to extract insights and knowledge from data. It includes tasks such as data cleaning, data visualization, exploratory data analysis, and modeling.

Business analytics, on the other hand, is a more focused field that involves the use of data analytics techniques to solve specific business problems or inform business decisions. It encompasses a range of techniques, including data mining, predictive analytics, and optimization, that are used to gain insights into business operations, customer behavior, and market trends.

- How do you handle missing data in a dataset?

Answer: There are several methods to handle missing data, including removing the rows (or columns) with missing data, imputing missing values with a statistic such as the mean, median, or mode (for example, with scikit-learn’s SimpleImputer), or using a model-based method such as k-nearest neighbors (KNN) imputation.
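A minimal sketch of the three approaches, assuming pandas and scikit-learn are installed. The tiny `age`/`income` table is made up for illustration; `SimpleImputer` and `KNNImputer` are existing classes in `sklearn.impute`.

```python
import numpy as np
import pandas as pd
from sklearn.impute import KNNImputer, SimpleImputer

# Made-up data with missing values (NaN)
df = pd.DataFrame({"age": [25, np.nan, 35, 40],
                   "income": [50_000, 60_000, np.nan, 80_000]})

# 1) Remove every row that contains a missing value
dropped = df.dropna()

# 2) Statistical imputation: replace NaN with the column median
#    (strategy can also be "mean" or "most_frequent", i.e. the mode)
median_imputed = pd.DataFrame(
    SimpleImputer(strategy="median").fit_transform(df), columns=df.columns)

# 3) KNN imputation: replace NaN with the average of that feature
#    over the 2 most similar rows
knn_imputed = pd.DataFrame(
    KNNImputer(n_neighbors=2).fit_transform(df), columns=df.columns)
```

KNN imputation relies on distances, so in practice the features are usually scaled first; here `income` dominates the distance simply because its values are larger. Fit imputers on the training data only and then apply them to the test data, to avoid leaking information.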

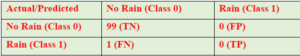

- What is a confusion matrix, and what is the difference between precision and recall?

Answer: A confusion matrix (also called an error matrix) is a tool used in machine learning and statistical classification to evaluate the performance of a classification model by comparing predicted class labels with actual class labels. It is a square matrix with one row and one column per class, so for a binary problem it is a 2×2 table of true positives, false positives, false negatives, and true negatives.

Precision is the proportion of true positive predictions out of all positive predictions, while recall is the proportion of true positive predictions out of all actual positive instances.
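The definitions can be checked on a small example. This sketch assumes scikit-learn; the label lists are made up. For binary labels `[0, 1]`, scikit-learn’s `confusion_matrix` puts actual classes in rows and predicted classes in columns, so `ravel()` returns TN, FP, FN, TP in that order.

```python
from sklearn.metrics import confusion_matrix, precision_score, recall_score

# Made-up binary labels: 1 = positive class, 0 = negative class
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]

# For labels [0, 1] the layout is [[TN, FP], [FN, TP]]
cm = confusion_matrix(y_true, y_pred)
tn, fp, fn, tp = cm.ravel()

precision = tp / (tp + fp)  # share of positive predictions that were correct
recall = tp / (tp + fn)     # share of actual positives that were found

# scikit-learn's helper functions compute the same values
sk_precision = precision_score(y_true, y_pred)
sk_recall = recall_score(y_true, y_pred)
```

Here TP = 3, FP = 2, FN = 1, and TN = 4, so precision = 3/5 = 0.6 and recall = 3/4 = 0.75.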

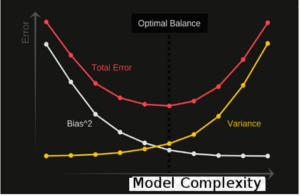

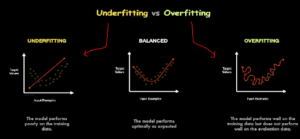

- Can you explain the bias-variance trade-off?

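Answer: The bias-variance trade-off is the balancing act between underfitting and overfitting a model. A model with high bias underfits the data and performs poorly on both the training and test sets, while a model with high variance overfits the data: it performs well on the training set but poorly on the test set. Making a model more complex usually lowers bias but raises variance, so the goal is to find the level of complexity that minimizes the total error on unseen data.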

- What is regularization, and why is it useful in machine learning?

Answer: Regularization is a technique used to prevent overfitting by adding a penalty term to the cost function that penalizes large weights in a model. Regularization is one of the main techniques to handle overfitting in machine learning and helps to improve the generalization performance of ML models. There are two common types of regularization: L1 and L2 regularization. L1 regularization, also known as Lasso regularization, adds a penalty term equal to the sum of the absolute values of the model’s coefficients. L2 regularization, also known as Ridge regularization, adds a penalty term equal to the sum of the squares of the model’s coefficients. Both L1 and L2 regularization encourage the model to have smaller coefficients, which can help to reduce overfitting.
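The practical difference between the two penalties shows up in the fitted coefficients. This sketch assumes scikit-learn and uses synthetic data in which only the first two of ten features actually affect the target; the `alpha` values are arbitrary illustrative choices, not tuned settings.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge

rng = np.random.default_rng(0)
# Synthetic data: only features 0 and 1 influence y
X = rng.normal(size=(200, 10))
y = 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(scale=0.5, size=200)

# alpha sets the strength of the penalty in both models
lasso = Lasso(alpha=0.1).fit(X, y)  # L1: penalizes the sum of |coefficients|
ridge = Ridge(alpha=1.0).fit(X, y)  # L2: penalizes the sum of squared coefficients

# Count coefficients that are exactly zero
n_zero_lasso = int(np.sum(lasso.coef_ == 0))
n_zero_ridge = int(np.sum(ridge.coef_ == 0))
```

With data like this, Lasso typically sets most of the eight irrelevant coefficients exactly to zero (a form of built-in feature selection), while Ridge shrinks all ten coefficients but leaves them non-zero.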

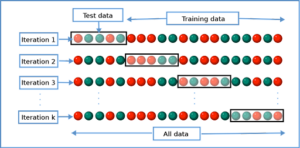

- Can you explain cross-validation?

Answer: Cross-validation is a technique for estimating how well a model will perform on new data by partitioning the available data into training and testing sets. This process is repeated multiple times, with a different partition used for training and testing each time, and the results are averaged to obtain a more reliable estimate of the model’s performance than a single train/test split would give.
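In scikit-learn, the whole procedure can be run with `cross_val_score`. A minimal sketch, assuming scikit-learn and using its bundled Iris dataset; logistic regression is just an example model.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)  # example model; any estimator works

# Train and evaluate 5 times, each time holding out a different fifth of the data
scores = cross_val_score(model, X, y, cv=5)
mean_score = scores.mean()  # cross-validated estimate of accuracy
```

For classifiers, passing an integer `cv` makes scikit-learn use stratified k-fold splitting.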

- What are the different types of cross-validation techniques?

Answer: Some common types of cross-validation techniques include:

- k-fold cross-validation: In k-fold cross-validation, the data is divided into k subsets (folds) of approximately equal size. The model is trained on k-1 of these folds and validated on the remaining fold. This process is repeated k times, so that each fold serves as the validation set exactly once, and the k scores are averaged.

- Leave-one-out cross-validation: In leave-one-out cross-validation, each data point is used as the validation set once, and the model is trained on all the remaining data. It is particularly useful for small datasets because almost all the data is used for training, but it requires training one model per data point, which becomes expensive for large datasets.

- Stratified cross-validation: Stratified cross-validation is used when the dataset is imbalanced, i.e., when there are unequal numbers of samples in each class. In this technique, the data is divided into folds such that the class distribution is approximately the same in each fold.
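The three schemes correspond to splitter classes in `sklearn.model_selection`. This sketch, assuming scikit-learn and a made-up set of 12 samples with an 8:4 class split, compares how many rounds each scheme produces and checks that stratification keeps the class ratio in every validation fold.

```python
import numpy as np
from sklearn.model_selection import KFold, LeaveOneOut, StratifiedKFold

# Made-up imbalanced data: 8 negatives, 4 positives
X = np.arange(12).reshape(-1, 1)
y = np.array([0] * 8 + [1] * 4)

kfold = KFold(n_splits=4, shuffle=True, random_state=0)
loo = LeaveOneOut()
skfold = StratifiedKFold(n_splits=4, shuffle=True, random_state=0)

# Number of train/validation rounds each scheme produces
n_kfold = kfold.get_n_splits(X)  # 4
n_loo = loo.get_n_splits(X)      # one round per sample: 12

# With stratification, each validation fold holds 2 negatives and 1 positive
positives_per_fold = [int(y[val].sum()) for _, val in skfold.split(X, y)]
```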

- What is the difference between a parametric and a non-parametric model?

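Answer: Parametric models assume a specific form for the underlying distribution of the data, described by a fixed number of parameters (for example, linear regression), while non-parametric models make few or no such assumptions, and their complexity can grow with the amount of data (for example, k-nearest neighbors or decision trees). Parametric models are generally more efficient but less flexible than non-parametric models.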

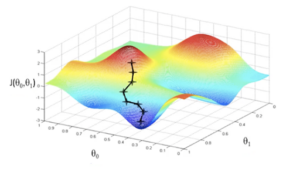

- Can you explain gradient descent?

Answer: Gradient descent is an optimization algorithm used to find the minimum of a cost function. The algorithm starts with an initial guess for the model parameters and iteratively updates them by taking steps, scaled by a learning rate, in the direction of the negative gradient of the cost function until it converges to a minimum. For non-convex cost functions, this may be a local rather than a global minimum.
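A minimal NumPy sketch of batch gradient descent for linear regression with a mean-squared-error cost. The learning rate `lr`, the iteration count, and the noise-free data `y = 1 + 2x` are illustrative assumptions; with them, the weights should end up very close to the true intercept 1 and slope 2.

```python
import numpy as np

def gradient_descent(X, y, lr=0.1, n_iters=2000):
    """Minimize the mean squared error of a linear model with batch gradient descent."""
    w = np.zeros(X.shape[1])                # initial guess for the parameters
    for _ in range(n_iters):
        residual = X @ w - y                # prediction errors
        grad = 2 * X.T @ residual / len(y)  # gradient of the MSE cost
        w -= lr * grad                      # step against the gradient
    return w

# Illustrative data: y = 1 + 2x, with a column of ones for the intercept
x = np.linspace(0, 1, 50)
X = np.column_stack([np.ones_like(x), x])
y = 1 + 2 * x

w = gradient_descent(X, y)  # approximately [1, 2]
```

If `lr` is too large, the updates overshoot and diverge; if it is too small, convergence is slow.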

- How would you approach a data science problem?

Answer: A common approach to a data science problem is to start by understanding the business problem and defining the problem statement. Next, explore the data and perform any necessary data cleaning and feature engineering. Then, select an appropriate model and train it on the data. Finally, evaluate the model’s performance and interpret the results.

For Free, Demo classes Call: 020-71173143

Registration Link: Click Here!

- What is the Central Limit Theorem, and why is it important in statistics?

Answer: The Central Limit Theorem states that, for independent samples from a population with finite variance, the distribution of sample means approaches a normal distribution as the sample size increases, regardless of the shape of the underlying population distribution. This is important because it justifies using normal-based methods, such as confidence intervals and hypothesis tests, to draw conclusions about a population from a sample.
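A quick simulation illustrates the theorem. This sketch assumes NumPy; it draws many samples of size 50 from an exponential distribution (strongly skewed, with mean 1 and standard deviation 1) and looks at the distribution of their means.

```python
import numpy as np

rng = np.random.default_rng(42)
n, n_samples = 50, 10_000

# Draw 10,000 samples of size 50 from a skewed, non-normal population
samples = rng.exponential(scale=1.0, size=(n_samples, n))
sample_means = samples.mean(axis=1)

mean_of_means = sample_means.mean()  # theory: close to the population mean, 1.0
std_of_means = sample_means.std()    # theory: close to 1 / sqrt(50), about 0.141
```

A histogram of `sample_means` looks close to a bell curve even though the population it came from is far from normal.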

- What is the curse of dimensionality, and how does it affect machine learning models?

Answer: The curse of dimensionality refers to the problems that arise when a dataset has a large number of features or variables, which can lead to overfitting and poor performance of machine learning models. As the number of dimensions increases, the volume of the feature space grows exponentially, so the data become sparse, and the number of data points needed to cover the space well enough to avoid overfitting also grows exponentially.
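One concrete symptom of the curse of dimensionality is that distances between points become less informative: in high dimensions, the nearest and farthest neighbors of a point are almost equally far away, which hurts distance-based methods such as KNN. This NumPy sketch measures that effect on uniformly random points; `distance_contrast` is a hypothetical helper written for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def distance_contrast(dim, n_points=500):
    """(max - min) / min of distances from one random query to random points in [0, 1]^dim."""
    points = rng.random((n_points, dim))
    query = rng.random(dim)
    d = np.linalg.norm(points - query, axis=1)
    return (d.max() - d.min()) / d.min()

contrast_2d = distance_contrast(2)        # large: nearest point is much closer than farthest
contrast_1000d = distance_contrast(1000)  # small: all points are at similar distances
```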

- What is overfitting, and how can it be prevented?

Answer: Overfitting occurs when a model is too complex and fits the noise in the training data rather than the underlying patterns. Overfitting can be reduced by using regularization techniques, such as L1 or L2 regularization, or by using a simpler model that has fewer parameters.

- What is the difference between a classification and a regression problem?

Answer: Classification and regression are both types of machine learning problems, but they differ in their goals and output. When performing classification, the objective is to make a prediction about a categorical variable, such as determining whether an email qualifies as spam or not. In regression, the goal is to predict a continuous variable, such as the price of a house or the age of a person.

- What are some common applications of regression in machine learning?

Answer: Regression is a supervised learning technique in machine learning that is used to predict a continuous output value based on input features. Some common applications of regression in machine learning include:

- Sales forecasting: Regression can be used to forecast future sales based on historical sales data, market trends, and other variables.

- Financial analysis: Regression can be used to predict stock prices, interest rates, and other financial variables based on historical data and other factors.

- Medical diagnosis: Regression can be used to predict the risk of developing a disease based on factors such as age, gender, lifestyle, and medical history.

- Marketing analysis: Regression can be used to predict customer behavior and preferences based on demographic data, purchase history, and other variables.

- Quality control: Regression can be used to predict the quality of a product based on manufacturing variables such as temperature, pressure, and time.

- Environmental Modeling: Regression can be used to predict air and water pollution levels based on weather patterns, emissions data, and other variables.

- Energy consumption: Regression can be used to predict energy consumption based on historical usage data, weather patterns, and other variables.

- What are some common applications of classification in machine learning?

Answer: Classification is a supervised learning technique in machine learning that is used to predict the class or category of a given input based on input features. Some common applications of classification in machine learning include:

- Spam detection: Classification can be used to classify emails as spam or not spam based on the contents of the email.

- Fraud detection: Classification can be used to detect fraudulent transactions in banking and finance based on patterns in the data.

- Medical diagnosis: Classification can be used to predict whether a patient has a particular disease or not based on symptoms, medical history, and other factors.

- Sentiment analysis: Classification can be used to analyze social media posts and classify them as positive, negative, or neutral based on the content of the post.

- Image recognition: Classification can be used to recognize objects in images and classify them into different categories such as animals, vehicles, and people.

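- Customer segmentation: When customer segments have already been defined and labeled, classification can be used to assign new customers to those segments based on demographic data, purchasing history, and other variables. (Discovering the segments in the first place is usually a clustering task.)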

- Credit risk analysis: Classification can be used to predict the creditworthiness of a borrower based on financial history, employment status, and other factors.

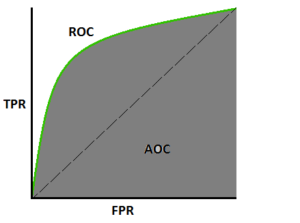

- Can you explain the ROC curve and AUC metric?

Answer: The ROC curve is a graphical representation of the relationship between the true positive rate and the false positive rate across multiple classification thresholds. The AUC metric is the area under the ROC curve and provides a measure of the model’s ability to distinguish between positive and negative instances.
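A minimal sketch with scikit-learn’s `roc_curve` and `roc_auc_score`, using four made-up labels and scores. The AUC can be read as the probability that a randomly chosen positive instance receives a higher score than a randomly chosen negative one: here 3 of the 4 positive/negative pairs are ranked correctly, giving 0.75.

```python
from sklearn.metrics import roc_auc_score, roc_curve

y_true = [0, 0, 1, 1]            # made-up true labels
y_score = [0.1, 0.4, 0.35, 0.8]  # made-up predicted probabilities of class 1

# One (FPR, TPR) point per threshold; plotting tpr against fpr draws the ROC curve
fpr, tpr, thresholds = roc_curve(y_true, y_score)
auc = roc_auc_score(y_true, y_score)  # 0.75
```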

- What is the difference between a parametric and a non-parametric hypothesis test?

Answer: A parametric hypothesis test assumes the data follow a particular distribution (for example, the t-test assumes approximate normality), while a non-parametric hypothesis test does not assume a specific distribution. Parametric tests are generally more powerful when their assumptions hold but less robust when those assumptions are violated.

- What is the difference between bagging and boosting?

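Answer: Bagging trains multiple models independently, each on a different bootstrap sample (a random sample drawn with replacement) of the data, and combines their predictions, mainly to reduce variance. Boosting trains models sequentially, with each new model focusing on the instances the previous models got wrong (for example, by giving misclassified instances more weight, as in AdaBoost), mainly to reduce bias.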
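To see both ideas side by side, this sketch assumes scikit-learn and a synthetic dataset from `make_classification`. Bagging is paired with fully grown trees (low bias, high variance) and boosting with depth-1 trees (high bias, low variance), which is a common but not mandatory choice; the numbers of estimators are arbitrary.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier, BaggingClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# Bagging: deep trees fit independently on bootstrap samples, then combined by voting
bagging = BaggingClassifier(DecisionTreeClassifier(), n_estimators=50, random_state=0)

# Boosting: shallow "stumps" fit one after another, each reweighting
# the examples the previous ones misclassified
boosting = AdaBoostClassifier(DecisionTreeClassifier(max_depth=1), n_estimators=50,
                              random_state=0)

bagging_acc = cross_val_score(bagging, X, y, cv=5).mean()
boosting_acc = cross_val_score(boosting, X, y, cv=5).mean()
```

The base estimator is passed positionally because its keyword name changed from `base_estimator` to `estimator` in scikit-learn 1.2.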

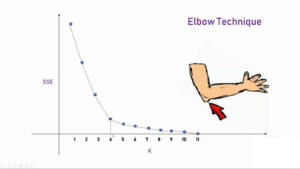

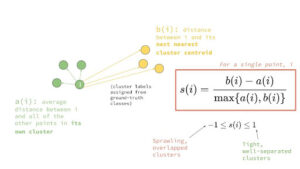

- How do you select the optimal number of clusters in a clustering algorithm?

Answer: The optimal number of clusters can be determined using various methods, such as the elbow method, silhouette score, or gap statistic.
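A sketch of the elbow method and the silhouette score, assuming scikit-learn and synthetic data with four well-separated blobs (generated with `make_blobs`). For each candidate k it records the inertia (within-cluster sum of squares), which generally keeps falling as k grows, so one looks for the "elbow" where the drop slows down, and the silhouette score, where higher is better. On this data, the silhouette score should peak at k = 4.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic data with 4 well-separated groups
X, _ = make_blobs(n_samples=300, centers=4, cluster_std=0.60, random_state=0)

inertias, silhouettes = {}, {}
for k in range(2, 7):
    km = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    inertias[k] = km.inertia_                         # elbow method: plot and look for the bend
    silhouettes[k] = silhouette_score(X, km.labels_)  # ranges from -1 to 1; higher is better

best_k = max(silhouettes, key=silhouettes.get)
```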

- What is the difference between probability and likelihood?

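Answer: Probability measures how likely a particular outcome is when the parameters of the model are fixed and known. Likelihood works the other way round: the observed data are fixed, and the likelihood measures how well different parameter values explain those data. Formally, the likelihood is the probability (or density) of the observed data viewed as a function of the parameters; unlike a probability distribution, it does not need to sum or integrate to one over the parameter values.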
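A coin-flip example makes the distinction concrete. This pure-Python sketch uses the binomial formula; `binom_pmf` is a helper written for illustration. The same function is read two ways: as a probability when the parameter `p` is fixed and the outcome varies, and as a likelihood when the observed data (7 heads in 10 flips) are fixed and `p` varies.

```python
from math import comb

def binom_pmf(k, n, p):
    """Probability of exactly k heads in n flips when P(heads) = p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

# Probability: parameter fixed (fair coin, p = 0.5), ask about a possible outcome
prob_7_heads = binom_pmf(7, 10, 0.5)  # 120 / 1024

# Likelihood: data fixed (7 heads in 10 flips observed), compare candidate parameters
candidates = [0.3, 0.5, 0.7, 0.9]
likelihoods = {p: binom_pmf(7, 10, p) for p in candidates}
most_likely_p = max(likelihoods, key=likelihoods.get)
```

Among the candidates, p = 0.7 gives the highest likelihood, matching the intuitive estimate 7/10.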

- What is the difference between a bias and a variance in a machine-learning model?

Answer: Bias is the error that occurs when a model is too simple and underfits the data, while variance is the error that occurs when a model is too complex and overfits the data. The goal is to balance bias and variance to achieve the best performance.

- What is feature engineering, and why is it important in machine learning?

Answer: Feature engineering is the process of selecting and transforming raw data features to improve the performance of machine learning models. It is important because the quality of the features often has a greater impact on model performance than the choice of algorithm.

- Explain precision and recall, and how they are related to the confusion matrix.

Answer: Precision is the ratio of true positive predictions to the total number of positive predictions, while recall is the ratio of true positive predictions to the total number of actual positive instances. Precision and recall are related to the confusion matrix because they are computed from the counts of true/false positives and negatives in the matrix.

- What is the difference between a decision tree and a random forest?

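Answer: A decision tree is a single tree-based model that uses a set of if-then rules to make decisions, while a random forest is an ensemble of decision trees, each trained on a bootstrap sample of the data and considering a random subset of features at each split. The forest combines the trees’ predictions (by majority vote or averaging) to reduce variance and improve performance.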

- What is the curse of big data, and how does it affect data analysis?

Answer: The curse of big data refers to the challenges of processing and analyzing large volumes of data, including issues such as storage, computation, and privacy. It affects data analysis by requiring new tools and techniques to process and analyze large datasets.

- What is cross-validation, and why is it important in machine learning?

Answer: Cross-validation is the process of dividing the dataset into multiple folds, then repeatedly training the model on all folds but one and testing it on the held-out fold, so that every fold is used for testing once. It is important in machine learning because it gives a more reliable estimate of how the model will perform on unseen data and helps detect overfitting when comparing models or hyperparameters.

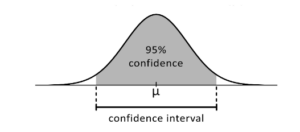

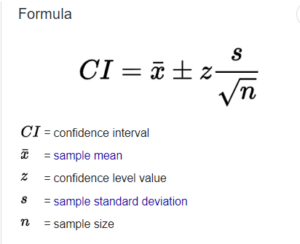

- What is the difference between a p-value and a confidence interval?

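Answer: A p-value is the probability, assuming the null hypothesis is true, of observing a result at least as extreme as the one actually observed; a small p-value is evidence against the null hypothesis. A confidence interval is a range of plausible values for a population parameter, constructed by a procedure that captures the true value in a stated proportion of repeated samples (for example, 95%). A p-value is mainly used for hypothesis testing, while a confidence interval is used for parameter estimation.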
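Both quantities can be computed from the same sample. This sketch assumes SciPy and NumPy, uses made-up measurements, and tests the null hypothesis that the population mean is 5.0.

```python
import numpy as np
from scipy import stats

sample = np.array([5.1, 4.9, 5.3, 5.5, 5.0, 5.2, 5.4, 5.1])  # made-up measurements

# p-value for H0: population mean = 5.0 (two-sided one-sample t-test)
p_value = stats.ttest_1samp(sample, popmean=5.0).pvalue

# 95% confidence interval for the population mean, based on the t distribution
mean = sample.mean()
sem = stats.sem(sample)  # standard error of the mean
ci_low, ci_high = stats.t.interval(0.95, len(sample) - 1, loc=mean, scale=sem)
```

For a two-sided test at level 0.05, the two views agree: the p-value is below 0.05 exactly when the 95% confidence interval excludes the hypothesized value 5.0.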

- Can you explain the difference between a one-tailed and a two-tailed test?

Answer: In a one-tailed test, the rejection region lies entirely in one tail of the distribution, so the null hypothesis is rejected only if the observed result is extreme in the pre-specified direction. In a two-tailed test, the rejection region is split between both tails, so the null hypothesis is rejected if the observed result is extreme in either direction. A one-tailed test is used when there is a specific direction of interest stated before seeing the data, while a two-tailed test is used when the direction is not known a priori.
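In SciPy, the direction of the test is selected with the `alternative` argument of `ttest_1samp` (available in SciPy 1.6 and later). A sketch with a made-up sample, testing against a mean of 5.0:

```python
from scipy import stats

sample = [5.1, 4.9, 5.3, 5.5, 5.0, 5.2, 5.4, 5.1]  # made-up measurements

# Two-tailed: H1 is "mean != 5.0"
p_two = stats.ttest_1samp(sample, popmean=5.0, alternative="two-sided").pvalue

# One-tailed: H1 is "mean > 5.0" (direction chosen before looking at the data)
p_greater = stats.ttest_1samp(sample, popmean=5.0, alternative="greater").pvalue
```

When the observed mean lies in the hypothesized direction, the one-tailed p-value is half the two-tailed one, which is why the direction of a one-tailed test must be fixed in advance.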

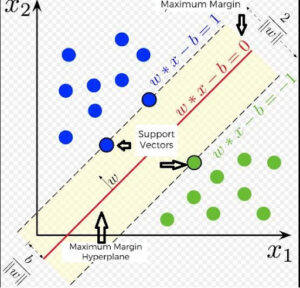

- What is the difference between a decision boundary and a hyperplane?

Answer: A decision boundary is the surface in feature space where a classifier’s predicted class changes; it can be straight or curved, depending on the model. A hyperplane is a flat (linear) subspace with one dimension fewer than the feature space: a line in 2-D, a plane in 3-D, and so on. When a classifier is linear (for example, logistic regression or a linear SVM), its decision boundary is a hyperplane, whereas non-linear models such as kernel SVMs or decision trees produce boundaries that are not hyperplanes.

- Can you explain the difference between type I error and type II error in statistics?

Answer: A type I error is a false positive error, where the null hypothesis is rejected even though it is true. A type II error is a false negative error, where the null hypothesis is not rejected even though it is false. The probability of type I error is denoted by alpha, while the probability of type II error is denoted by beta.

- Can you explain the difference between a mean and a median?

Answer: The mean is the average of a set of values, while the median is the middle value when the values are arranged in order. The mean is very sensitive to outliers in the dataset, while the median is much more robust to them.
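A small pure-Python illustration using the standard-library `statistics` module and made-up salary figures: adding one extreme value moves the mean a long way but barely changes the median.

```python
import statistics

salaries = [40, 42, 45, 47, 50]   # made-up values, in thousands
with_outlier = salaries + [1000]  # the same data plus one extreme value

mean_before = statistics.mean(salaries)        # 44.8
median_before = statistics.median(salaries)    # 45
mean_after = statistics.mean(with_outlier)     # 204
median_after = statistics.median(with_outlier) # 46.0
```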

- What is the difference between overfitting and underfitting?

Answer: Overfitting happens when a model is too complex and fits the training data too closely, including its noise, which results in poor generalization to new (test) data. Underfitting happens when a model is too simple to capture the underlying patterns in the data, which results in poor performance on both the training and the test data.

- Can you explain the difference between gradient descent and stochastic gradient descent?

Answer: Gradient descent is an optimization algorithm that minimizes a cost function by iteratively adjusting the model parameters in the direction of steepest descent, computing the gradient over the entire training set at every step. Stochastic gradient descent is a variant that estimates the gradient from a single randomly chosen example (or, in the mini-batch variant, a small random subset) at each iteration. Each update is therefore much cheaper but noisier, which usually speeds up training on large datasets.
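This NumPy sketch contrasts the two on synthetic linear-regression data. The learning rates, epoch count, and batch size of 32 are illustrative assumptions, and the stochastic version shown is the common mini-batch variant; both should land near the true intercept 1 and slope 2.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic data: y = 1 + 2x + noise, with a column of ones for the intercept
x = rng.random(1000)
X = np.column_stack([np.ones_like(x), x])
y = 1 + 2 * x + rng.normal(scale=0.1, size=1000)

def mse_grad(Xb, yb, w):
    """Gradient of the mean squared error of a linear model on the given rows."""
    return 2 * Xb.T @ (Xb @ w - yb) / len(yb)

# Batch gradient descent: every update uses all 1000 rows
w_gd = np.zeros(2)
for _ in range(1000):
    w_gd -= 0.5 * mse_grad(X, y, w_gd)

# Mini-batch stochastic gradient descent: each update uses 32 random rows
w_sgd = np.zeros(2)
for _ in range(50):                      # epochs
    order = rng.permutation(len(y))      # reshuffle the rows every epoch
    for start in range(0, len(y), 32):
        batch = order[start:start + 32]
        w_sgd -= 0.05 * mse_grad(X[batch], y[batch], w_sgd)
```

Each mini-batch step costs about 1/30 of a full-batch step, at the price of a noisier path toward the minimum.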

- Can you explain the difference between a continuous and a categorical variable?

Answer: A continuous variable is a variable that can take any value within a range, such as age or height. A categorical variable is a variable that can take a limited number of discrete values, such as gender or color.


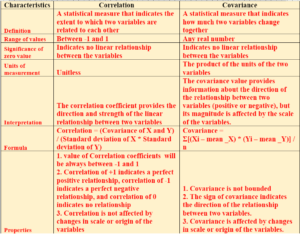

- Can you explain the difference between a correlation and a causation?

Answer: Correlation is a statistical measure that quantifies the strength and direction of the relationship between two variables, while causation refers to a causal relationship where one variable directly causes a change in another variable. Correlation does not imply causation, and establishing a causal relationship requires additional evidence and experimentation.

- Can you explain the difference between a parametric and a non-parametric model?

Answer: A parametric model is a model that assumes a specific form for the underlying distribution of the data, summarized by a fixed set of parameters, and uses those parameters to make predictions. A non-parametric model makes few or no assumptions about the form of the distribution and uses flexible, data-driven algorithms whose complexity can grow with the amount of data.

- What is a recommender system?

Answer: A recommender system is a type of machine learning model that is used to recommend items to users based on their past behavior or preferences. Recommender systems are commonly used in e-commerce, social media, and other domains where there are large amounts of data on user behavior and item characteristics. There are several types of recommender systems, including content-based, collaborative filtering, and hybrid recommender systems.

- What is feature selection?

Answer: Feature selection is the process of selecting a subset of the available features (or variables) in a dataset that is most relevant for a particular task, such as prediction or classification. Feature selection can improve the performance of machine learning models by reducing overfitting and improving interpretability.

- What is data cleaning in machine learning?

Answer: Data cleaning is the process of identifying and correcting or removing errors, inconsistencies, and inaccuracies in a dataset. The goal is to prepare the data for analysis and modeling by ensuring that it is accurate, complete, and consistent.

- Why is data cleaning important in machine learning?

Answer: Data cleaning is important in machine learning because the accuracy and effectiveness of the models depend on the quality of the data. If the data is dirty, it can lead to inaccurate or biased results, and can even cause the model to fail completely. Cleaning the data can help to improve the quality of the results and ensure that the model is working as intended.

- What are some common types of data-cleaning tasks?

Answer: Some common types of data cleaning tasks include removing duplicates, filling in missing values, correcting formatting errors, removing outliers, and resolving inconsistencies or contradictions.

- What are some techniques for removing duplicates in a dataset?

Answer: Some techniques for removing duplicates in a dataset include using the drop_duplicates() function in pandas, using the unique() function to identify unique values, and using hashing algorithms to identify duplicates based on their content.
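A sketch of these techniques in pandas, using a made-up table. `drop_duplicates`, `duplicated`, `unique`, and `pandas.util.hash_pandas_object` are existing pandas functions; hashing each row gives a compact fingerprint that can be compared instead of the full row contents.

```python
import pandas as pd

# Made-up records: rows 1 and 2 are exact duplicates; id 3 appears with two cities
df = pd.DataFrame({"id": [1, 2, 2, 3, 3],
                   "city": ["Pune", "Delhi", "Delhi", "Mumbai", "Chennai"]})

no_exact_dupes = df.drop_duplicates()                             # identical in every column
one_row_per_id = df.drop_duplicates(subset=["id"], keep="first")  # judged on "id" only
is_dupe = df.duplicated()                                         # True for repeats of an earlier row
unique_cities = df["city"].unique()                               # distinct values in one column

# Hashing: one fingerprint per row; identical rows get identical hashes
row_hashes = pd.util.hash_pandas_object(df, index=False)
hash_dupes = row_hashes.duplicated()
```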

- What is EDA (Exploratory data analysis)?

Answer: EDA stands for exploratory data analysis and it is the process of examining and analyzing the data to understand its structure, patterns, and relationships between variables. EDA is an important step in the machine learning process as it helps to identify potential issues with the data and can inform feature engineering and selection.

This image may not be related to the answer above.

It is a reminder for when you are dealing with data.

You can do it! 💪

- What are some common techniques used in EDA?

Answer: Some common techniques used in EDA include data visualization, descriptive statistics, correlation analysis, and feature engineering. Data visualization techniques, such as histograms, scatter plots, and box plots, can help to identify the distribution of the data and potential outliers. Descriptive statistics, such as mean, median, and standard deviation, can provide additional insights into the data. Correlation analysis can help to identify relationships between variables, while feature engineering involves transforming and creating new features based on the insights gained from the EDA.

- What are some techniques for filling in missing values in a dataset?

Answer: Some techniques for filling in missing values in a dataset include using the mean or median value of the feature, using forward or backward filling to fill in missing values with the previous or next value, and using machine learning models to predict missing values based on other features.
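The simpler strategies map directly onto pandas methods. A minimal sketch, assuming pandas and a made-up series of readings with two gaps:

```python
import numpy as np
import pandas as pd

s = pd.Series([10.0, np.nan, 14.0, np.nan, 30.0])  # made-up readings with two gaps

mean_filled = s.fillna(s.mean())      # mean of observed values: (10 + 14 + 30) / 3 = 18
median_filled = s.fillna(s.median())  # median of observed values: 14
forward_filled = s.ffill()            # copy the previous observed value forward
backward_filled = s.bfill()           # copy the next observed value backward
```

Forward and backward filling make most sense for ordered data such as time series; for model-based imputation, scikit-learn’s `sklearn.impute` module offers options such as `KNNImputer`.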

- How do you ensure that data remains clean and accurate over time?

Answer: To ensure that data remains clean and accurate over time, it is important to establish data governance processes and standards, implement data validation and verification procedures, and monitor data quality on an ongoing basis. Regular data audits and reviews can also help to identify and correct any issues that arise.

- What is encoding in machine learning?

Answer: Encoding is the process of transforming categorical data into a numerical representation that can be used in machine learning algorithms.

- Why is encoding necessary in machine learning?

Answer: Most machine learning algorithms require numerical input, so encoding is necessary to convert categorical data into a numerical format that the algorithms can use.

- What are some common methods for encoding categorical data?

Answer: Some common methods for encoding categorical data include one-hot encoding, label encoding, and binary encoding.

- What is one-hot encoding?

Answer: One-hot encoding is a method of encoding categorical data where each category is converted into a binary vector where only one element is 1 and the rest are 0s. This allows the machine learning algorithm to interpret each category as a separate feature.

- What is label encoding?

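Answer: Label encoding is a method of encoding categorical data where each category is assigned an integer label (for example, in alphabetical order). Because the integers imply an order that the categories may not have, label encoding is best suited to target labels or to tree-based models; when the categories do have a natural ordering, such as low, medium, and high, ordinal encoding with an explicit order is the safer choice.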

- What is ordinal encoding?

Answer: Ordinal encoding is a method of encoding categorical data where each category is assigned a numerical value based on its rank or order. This method is useful when the categories have a natural ordering, such as grades or levels.
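A sketch of the three encodings, assuming pandas and scikit-learn and a made-up two-column table. scikit-learn’s `LabelEncoder` assigns integers in alphabetical order, so the numbers carry no meaningful ranking, while `OrdinalEncoder` is given the order low < medium < high explicitly.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder, OrdinalEncoder

df = pd.DataFrame({"color": ["red", "green", "blue", "green"],
                   "size": ["low", "high", "medium", "low"]})  # made-up data

# One-hot: one 0/1 column per category, no implied order
one_hot = pd.get_dummies(df["color"], prefix="color", dtype=int)

# Label encoding: alphabetical integers (blue=0, green=1, red=2)
color_labels = LabelEncoder().fit_transform(df["color"])

# Ordinal encoding with an explicit order: low=0, medium=1, high=2
size_encoder = OrdinalEncoder(categories=[["low", "medium", "high"]])
size_codes = size_encoder.fit_transform(df[["size"]]).ravel()
```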

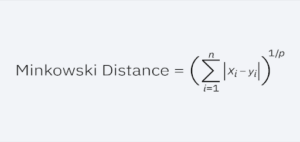

- Why is scaling important in machine learning?

Answer: Scaling is important in machine learning because many algorithms are sensitive to the scale and distribution of the input features. For example, distance-based algorithms such as k-nearest neighbors (KNN) and support vector machines (SVM) can be affected by the magnitude of the features, while gradient descent-based algorithms such as logistic regression and neural networks can benefit from scaling to improve convergence and avoid numerical instability.

- What are some common methods for scaling data?

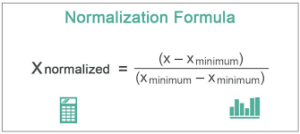

Answer: Some common methods for scaling data include normalization, standardization, and min-max scaling. Normalization in the strict sense rescales each sample (row) to have unit norm; standardization rescales each feature to have a mean of zero and a standard deviation of one; and min-max scaling maps each feature to a specified range, typically between 0 and 1. Note that the word "normalization" is also commonly used to mean min-max scaling.
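A sketch of the three methods using scikit-learn’s preprocessing classes on a tiny made-up matrix. `Normalizer` works row by row (each sample gets unit length), while `MinMaxScaler` and `StandardScaler` work column by column.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, Normalizer, StandardScaler

X = np.array([[1.0, 200.0],
              [2.0, 300.0],
              [3.0, 400.0]])  # made-up data: two features on very different scales

X_minmax = MinMaxScaler().fit_transform(X)        # each column mapped to [0, 1]
X_std = StandardScaler().fit_transform(X)         # each column: mean 0, std 1
X_unit = Normalizer(norm="l2").fit_transform(X)   # each row rescaled to length 1
```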

- How do you handle outliers in machine learning?

Answer: Outliers can be handled in several ways in machine learning, depending on the nature and extent of the outliers. One approach is to remove the outliers from the dataset, either by manual inspection or by using statistical methods such as the interquartile range (IQR) or z-score. Another approach is to use robust methods that are less sensitive to outliers, such as median-based estimators or the Huber loss function.

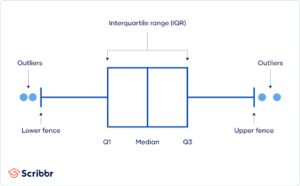

- What is the interquartile range (IQR) method for outlier detection?

Answer: The interquartile range (IQR) method is a statistical method for detecting outliers based on the distribution of the data. It involves calculating the IQR, which is the difference between the 75th and 25th percentiles (Q3 and Q1) of the data, and using it to define thresholds for outliers. Any data point that lies more than 1.5 times the IQR below the 25th percentile, or more than 1.5 times the IQR above the 75th percentile, is considered an outlier.
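A NumPy sketch of the IQR rule on made-up values. `np.percentile` uses linear interpolation by default, so Q1 = 12 and Q3 = 13.75 here, the fences are 9.375 and 16.375, and only the value 102 falls outside them.

```python
import numpy as np

data = np.array([10, 12, 12, 13, 12, 11, 14, 13, 15, 102])  # made-up values

q1, q3 = np.percentile(data, [25, 75])
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr  # the 1.5 x IQR fences

outliers = data[(data < lower) | (data > upper)]
```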

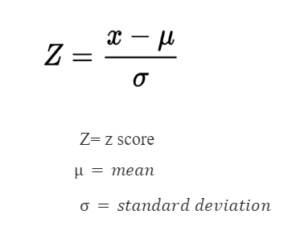

- What is the z-score method for outlier detection?

Answer: The z-score method is a statistical method for detecting outliers based on the standard deviation of the data. It involves calculating the z-score for each data point, which is the number of standard deviations it lies away from the mean, and using it to define a threshold for outliers. Any data point whose absolute z-score exceeds a chosen threshold, typically 2 or 3, is considered an outlier.
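A NumPy sketch of the z-score rule, assuming synthetic normal data with two injected extreme values and the common threshold of 3.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic data: 200 readings around 50 (std 5) plus two injected extreme values
data = np.concatenate([rng.normal(50, 5, size=200), [95.0, 5.0]])

z_scores = (data - data.mean()) / data.std()  # distance from the mean in std units
threshold = 3
outliers = data[np.abs(z_scores) > threshold]
```

With very small samples this rule can miss obvious outliers: an extreme value inflates the standard deviation, and with n points no z-score (computed with the population standard deviation) can exceed sqrt(n - 1).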

- How can you use clustering for outlier detection?

Answer: Clustering can be used for outlier detection by identifying data points that are far from the clusters or do not belong to any cluster. One approach is to use density-based clustering algorithms such as DBSCAN or OPTICS to identify dense regions of the data and label the data points that are not part of any cluster as outliers. Another approach is to use distance-based clustering algorithms such as k-means or hierarchical clustering to identify the data points that are farthest from the clusters as outliers.
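A sketch of the density-based approach, assuming scikit-learn’s `DBSCAN` and a made-up set of points. `eps` (the neighborhood radius) and `min_samples` are illustrative values that would need tuning on real data; points that belong to no cluster receive the label -1.

```python
import numpy as np
from sklearn.cluster import DBSCAN

# Made-up points: two tight groups plus one isolated point
X = np.array([[1.0, 1.0], [1.1, 1.0], [0.9, 1.1], [1.0, 0.9],
              [5.0, 5.0], [5.1, 5.1], [4.9, 5.0], [5.0, 4.9],
              [10.0, 0.0]])

labels = DBSCAN(eps=0.5, min_samples=3).fit_predict(X)
outliers = X[labels == -1]  # noise points, i.e. not part of any dense region
```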

- How can you use anomaly detection for outlier detection?

Answer: Anomaly detection is a machine learning technique specifically designed for detecting outliers or anomalies in data. It typically involves training a model on data that is mostly normal and using it to flag data points that are significantly different or unusual. Some common approaches to anomaly detection include isolation forest, one-class SVM, and autoencoders.
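A sketch with scikit-learn’s `IsolationForest`, trained on synthetic "normal" data. `contamination=0.05` (the assumed share of anomalies, which sets the decision threshold) and the two test points are illustrative choices.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(0)
X_train = rng.normal(0, 1, size=(300, 2))    # synthetic "normal" behaviour
X_new = np.array([[0.1, -0.2], [8.0, 8.0]])  # one typical point, one far-away point

iso = IsolationForest(n_estimators=200, contamination=0.05, random_state=0).fit(X_train)
pred = iso.predict(X_new)  # +1 = normal, -1 = anomaly
```

Isolation forest works by randomly splitting the feature space: anomalies tend to be isolated after only a few splits, so short average path lengths indicate unusual points.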

- What is normalization in machine learning?

Answer: Normalization is a technique for scaling the input features in machine learning so that they share a common scale or range. The term is used loosely: most often it means min-max scaling, which maps each feature to the range 0 to 1, but it can also mean rescaling each sample to unit norm. Rescaling features to zero mean and unit variance is usually called standardization.

- What is standardization in machine learning?

Answer: Standardization is a type of normalization in machine learning that involves scaling the input features to have zero mean and unit variance. It involves subtracting the mean of each feature and dividing by its standard deviation., which scales the features to have a mean of 0 and a standard deviation of 1. and the z-score technique is used for standardization.


- When should you use normalization versus standardization?

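Answer: Normalization and standardization suit different situations. Min-max normalization is useful when features must lie in a bounded range, for example for some neural network inputs or for distance-based algorithms such as KNN, but it is sensitive to outliers because a single extreme value sets the minimum or maximum. Standardization is usually preferred for gradient-based optimization and for methods such as PCA, regularized linear models, and SVMs; because it does not force values into a fixed range, a single outlier compresses the remaining values less than it does with min-max scaling. When in doubt, both can be compared with cross-validation.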

- Can you normalize or standardize categorical features?

Answer: Normalization and standardization are typically applied to continuous or numerical features. Categorical features are typically encoded using techniques such as one-hot encoding, label encoding, or binary encoding.
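A small pandas example of one-hot encoding with `pd.get_dummies`; the `city`/`income` data frame is made up. The numeric column passes through untouched and could then be normalized or standardized separately.

```python
import pandas as pd

df = pd.DataFrame({"city": ["Pune", "Mumbai", "Pune", "Delhi"],
                   "income": [50, 80, 60, 70]})

# One indicator column per category; numeric columns are left as they are
encoded = pd.get_dummies(df, columns=["city"], dtype=int)
print(encoded.columns.tolist())  # ['income', 'city_Delhi', 'city_Mumbai', 'city_Pune']
```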

- What is imbalanced data, and how do you handle it?

Answer: Imbalanced data refers to a situation in which the distribution of classes in a dataset is not equal. That is, one class has a significantly higher number of instances than the other classes.

Imbalanced data is common in real-world problems such as fraud detection or medical diagnosis, where the minority class (the fraudulent transaction or the patient with the disease) is usually the one that matters most.

To handle imbalanced data, several techniques can be employed:

- Resampling: You can oversample the minority class, undersample the majority class, or both, to balance the dataset. Common techniques include random oversampling, random undersampling, and SMOTE (Synthetic Minority Over-sampling Technique), which creates synthetic minority examples by interpolating between neighboring minority samples.

- Ensemble methods: Ensemble methods, such as bagging, boosting, and stacking, can be used to combine multiple models to improve performance on imbalanced data.

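- Algorithm selection: Some algorithms, such as tree-based methods like decision trees and random forests, often cope better with imbalanced data, and many algorithms accept class weights (for example, the `class_weight` parameter in scikit-learn) that penalize errors on the minority class more heavily. Choosing an appropriate algorithm and configuration can improve performance.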

- Data augmentation: For data such as images, augmentation techniques like rotation, scaling, and flipping can generate new examples of the minority class, which can help improve the performance of the model.

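It is important to choose the technique based on the specific characteristics of the dataset and the problem at hand. Whatever the technique, evaluate the model with metrics such as precision, recall, F1 score, or AUC rather than accuracy alone, because a model that always predicts the majority class can still achieve high accuracy.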
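A from-scratch sketch of random oversampling in NumPy follows; `random_oversample` is a hypothetical helper, and the 8-to-2 toy labels are invented. It only duplicates existing rows, so it should be applied to the training split after the train/test split; otherwise copies of the same row can end up in both sets. For SMOTE, the separate imbalanced-learn library provides an implementation.

```python
import numpy as np

def random_oversample(X, y, random_state=0):
    """Duplicate randomly chosen rows of smaller classes until every class matches the largest one."""
    rng = np.random.default_rng(random_state)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    X_parts, y_parts = [X], [y]
    for cls, count in zip(classes, counts):
        if count < target:
            idx = np.flatnonzero(y == cls)
            extra = rng.choice(idx, size=target - count, replace=True)  # sample with replacement
            X_parts.append(X[extra])
            y_parts.append(y[extra])
    return np.vstack(X_parts), np.concatenate(y_parts)

X = np.arange(20, dtype=float).reshape(10, 2)
y = np.array([0, 0, 0, 0, 0, 0, 0, 0, 1, 1])  # 8 vs 2: imbalanced
X_bal, y_bal = random_oversample(X, y)
print(np.bincount(y_bal))  # [8 8]
```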

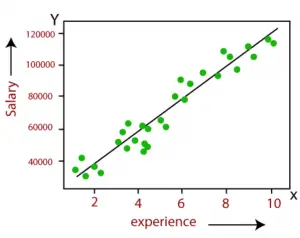

- What is linear regression?

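Answer: Linear regression is a statistical method that models the relationship between a dependent variable and one or more independent variables. It assumes that the relationship is linear and finds the best-fitting line (or hyperplane, with several predictors), usually by ordinary least squares, which chooses the coefficients that minimize the sum of squared differences between the observed and predicted values.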
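The least-squares fit can be sketched with NumPy's `np.linalg.lstsq`. The data is synthetic and generated without noise from y = 3 + 2*x1 - 1*x2, so the recovered coefficients should match those values; with real, noisy data they would only be estimates.

```python
import numpy as np

# Toy data generated from y = 3 + 2*x1 - 1*x2 (no noise), for illustration only
X = np.array([[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 5.0]])
y = 3 + 2 * X[:, 0] - 1 * X[:, 1]

# Prepend a column of ones so the first coefficient is the intercept
X_design = np.column_stack([np.ones(len(X)), X])

# Ordinary least squares: minimize the sum of squared residuals ||y - X_design @ beta||^2
beta, *_ = np.linalg.lstsq(X_design, y, rcond=None)
print(beta.round(6))  # approximately [3, 2, -1]
```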

- What are the assumptions of linear regression?

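Answer: The main assumptions of linear regression are linearity (the relationship between the predictors and the target is linear), independence of the errors, homoscedasticity (the errors have constant variance), normality of the errors (residuals), and absence of perfect multicollinearity among the predictors. These assumptions matter because violating them can make the coefficient estimates, their standard errors, and significance tests unreliable.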

- How do you measure the goodness of fit in linear regression?

Answer: The goodness of fit in linear regression is typically measured using the coefficient of determination (R-squared) or the root mean squared error (RMSE). R-squared measures the proportion of variance in the dependent variable that is explained by the independent variables, while RMSE is the square root of the average squared difference between the predicted and actual values, expressed in the units of the dependent variable.

- How do you handle multicollinearity in linear regression?

Answer: Multicollinearity occurs when there is a high correlation between two or more independent variables. This can cause issues with the interpretation of the coefficients and lead to unstable estimates. To handle multicollinearity, one approach is to remove one of the correlated variables from the model. Another approach is to use regularization techniques, such as ridge regression or lasso regression, which can help to reduce the impact of multicollinearity on the estimates.
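To see why regularization helps, the sketch below builds two nearly identical predictors (synthetic data, with the true effect carried by `x1` alone) and compares ordinary least squares with ridge regression using the closed-form solution beta = (X^T X + alpha*I)^-1 X^T y. The helper `fit_ridge`, the value alpha = 1.0, and the omission of an intercept are simplifying assumptions; scikit-learn's `Ridge` handles the intercept for you.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.01, size=n)  # x2 is almost a copy of x1
X = np.column_stack([x1, x2])
y = 3 * x1 + rng.normal(scale=0.5, size=n)

def fit_ridge(X, y, alpha):
    """Closed-form ridge regression without an intercept (the toy data is roughly centered)."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(p), X.T @ y)

beta_ols = fit_ridge(X, y, alpha=0.0)    # alpha = 0 reduces to ordinary least squares
beta_ridge = fit_ridge(X, y, alpha=1.0)
print("OLS:  ", beta_ols.round(2))    # unstable: can be large and offsetting
print("Ridge:", beta_ridge.round(2))  # roughly [1.5, 1.5], sharing the effect
```

OLS can return large coefficients that happen to cancel, because the data barely distinguishes the two copies; ridge spreads the effect across them, giving smaller and more stable estimates.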

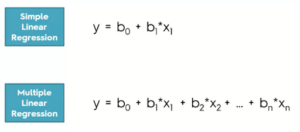

- What is the difference between simple linear regression and multiple linear regression?

Answer: Simple linear regression models the relationship between a single independent variable and a dependent variable, while multiple linear regression models the relationship between a dependent variable and several independent variables. Multiple linear regression can capture more complex relationships between variables, but including too many variables increases the risk of overfitting.

- What are some common performance metrics for regression problems in ML?

Answer: Some common performance metrics for regression problems include:

- Mean Squared Error (MSE): The average of the squared differences between the predicted and actual values. Squaring gives more weight to larger errors, which makes MSE sensitive to outliers.

- Root Mean Squared Error (RMSE): The square root of MSE. It is often used to compare models because it is expressed in the same units as the dependent variable.

- Mean Absolute Error (MAE): The average absolute difference between the predicted and actual values. MAE is often preferred over MSE when the dataset contains outliers, because it weights each error in proportion to its size, whereas squaring lets a few large errors dominate MSE.

- R-squared (R²): The proportion of the variance in the dependent variable that is explained by the independent variables, computed as 1 - (sum of squared residuals / total sum of squares). For a least-squares model with an intercept, evaluated on its training data, it lies between 0 and 1, with higher values indicating a better fit; on new data it can be negative when the model does worse than always predicting the mean.
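The four metrics can be computed by hand with NumPy, which makes the formulas concrete; the four predictions below are invented. scikit-learn offers the same metrics as `mean_squared_error`, `mean_absolute_error`, and `r2_score` in `sklearn.metrics`.

```python
import numpy as np

y_true = np.array([3.0, 5.0, 2.5, 7.0])
y_pred = np.array([2.5, 5.0, 3.0, 8.0])

errors = y_true - y_pred
mse = np.mean(errors ** 2)        # (0.25 + 0 + 0.25 + 1) / 4 = 0.375
rmse = np.sqrt(mse)               # about 0.612, in the units of y
mae = np.mean(np.abs(errors))     # (0.5 + 0 + 0.5 + 1) / 4 = 0.5
ss_res = np.sum(errors ** 2)                     # residual sum of squares = 1.5
ss_tot = np.sum((y_true - y_true.mean()) ** 2)   # total sum of squares = 12.6875
r2 = 1 - ss_res / ss_tot                         # about 0.882
print(mse, round(rmse, 3), mae, round(r2, 3))
```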

For Free, Demo classes Call: 020-71173143

Registration Link: Click Here!

- What are some common performance metrics for classification problems in ML?

Answer:

- Accuracy: The proportion of correctly classified samples out of the total number of samples.

- Precision: The proportion of predicted positives that are actually positive, TP / (TP + FP).

- Recall (Sensitivity or True Positive Rate): The proportion of actual positives that are correctly classified, TP / (TP + FN).

- F1 score: The harmonic mean of precision and recall, 2 × precision × recall / (precision + recall). It ranges from 0 to 1, with higher values indicating better performance.

- ROC curve: A plot of the true positive rate against the false positive rate at different classification thresholds.

- AUC (Area Under the ROC Curve): The area under the ROC curve, which ranges from 0 to 1; 0.5 corresponds to random guessing and 1 to a perfect ranking of positives above negatives.
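A plain-Python sketch that derives these metrics from the confusion-matrix counts (TP, FP, FN, TN) is shown below; the labels and scores are invented, and a 0.5 threshold turns scores into predicted labels. AUC depends on the ranking of the scores rather than on a single threshold, so it is computed here with scikit-learn's `roc_auc_score`.

```python
from sklearn.metrics import roc_auc_score

def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_score = [0.9, 0.8, 0.7, 0.3, 0.6, 0.4, 0.2, 0.1, 0.05, 0.35]  # predicted probabilities
y_pred = [int(s >= 0.5) for s in y_score]                        # threshold at 0.5

print(classification_metrics(y_true, y_pred))  # (0.8, 0.75, 0.75, 0.75)
auc = roc_auc_score(y_true, y_score)           # about 0.875: 21 of 24 positive/negative pairs ranked correctly
print(auc)
```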

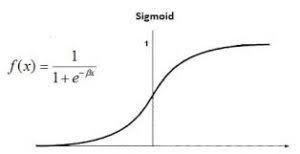

- What is logistic regression and how is it used in machine learning?

Answer: Logistic regression is a statistical method used for binary classification problems, where the response variable is categorical with two possible outcomes (e.g., yes/no, true/false). It finds the model that best describes the relationship between the predictor variables (input variables) and the binary response variable (target variable).

In logistic regression, a weighted sum of the predictor variables is passed through the sigmoid function, σ(z) = 1 / (1 + e^(-z)), which maps it to a probability between 0 and 1. The output of the model is interpreted as the probability of the positive class, and a decision threshold (0.5 by default) is used to classify new samples into the positive or negative class.

Logistic regression can be used in various applications, such as predicting customer churn, detecting fraudulent transactions, or diagnosing diseases based on medical data.
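A short scikit-learn sketch on invented one-dimensional data follows. It fits `LogisticRegression` with its default settings (which include L2 regularization) and then recomputes the predicted probability by hand from the learned weight and intercept, to show that the model is the sigmoid of a linear function.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Toy 1-D data: negative x belongs to class 0, positive x to class 1
X = np.array([[-3.0], [-2.0], [-1.5], [-1.0], [1.0], [1.5], [2.0], [3.0]])
y = np.array([0, 0, 0, 0, 1, 1, 1, 1])

clf = LogisticRegression().fit(X, y)

# The model computes sigmoid(w * x + b); reproduce it by hand for a new point
x_new = np.array([[0.5]])
z = clf.coef_[0, 0] * x_new[0, 0] + clf.intercept_[0]
p_manual = 1 / (1 + np.exp(-z))
p_sklearn = clf.predict_proba(x_new)[0, 1]  # probability of class 1
label = int(p_sklearn >= 0.5)               # default 0.5 decision threshold
print(round(p_manual, 4), round(p_sklearn, 4), label)
```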

- What are hyperparameters in machine learning?

Answer: Hyperparameters are parameters that are set prior to training a model and control its behavior during training. They are not learned from data and typically require manual tuning or optimization to achieve the best performance. Examples of hyperparameters include the learning rate, regularization strength, number of hidden layers and neurons, batch size, and activation functions.

- Why is hyperparameter tuning important in machine learning?

Answer: Hyperparameter tuning is important because it can significantly impact the performance of a machine learning model. Choosing the wrong hyperparameters can lead to overfitting, underfitting, or poor generalization, which can result in inaccurate predictions and wasted resources. By tuning the hyperparameters on a validation set or with cross-validation, we can find the settings that generalize best, while keeping the test set for the final, unbiased evaluation.

- What are some methods for hyperparameter tuning?

Answer: There are several methods for hyperparameter tuning, including:

- Grid search: Grid search is a hyperparameter tuning technique that involves defining a set of values for each hyperparameter and systematically evaluating all possible combinations of these values to determine the best configuration for the model.

- Random Search: This involves randomly sampling hyperparameter values from predefined distributions to explore the hyperparameter space more efficiently.

- Bayesian optimization: This involves using a probabilistic model to predict the performance of different hyperparameter settings and selecting the most promising ones to evaluate.

- Genetic algorithms: This involves using evolutionary algorithms to search the hyperparameter space by generating and evaluating candidate solutions based on their fitness.

- How can we avoid overfitting during hyperparameter tuning?

Answer: Overfitting can occur during hyperparameter tuning if we evaluate the performance of the model too many times on the same validation set, leading to over-optimistic estimates of the performance. To avoid overfitting, we can use techniques such as cross-validation or holdout validation, where we split the data into training, validation, and test sets, and only use the test set for the final evaluation. We can also use regularization techniques, such as dropout or weight decay, to prevent the model from overfitting to the training data.
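A sketch of this workflow with scikit-learn: hold out a test set, run `GridSearchCV` with 5-fold cross-validation on the training portion only, and use the test set once at the end. The Iris dataset, the decision tree, and the grid values are arbitrary choices for illustration. Swapping `GridSearchCV` for `RandomizedSearchCV` (with `n_iter` set) gives random search with the same interface.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# 1. Hold out a test set that plays no part in tuning
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

# 2. Grid search with 5-fold cross-validation on the training data only
param_grid = {"max_depth": [1, 2, 3, 5, None], "min_samples_leaf": [1, 5, 10]}
search = GridSearchCV(DecisionTreeClassifier(random_state=0), param_grid, cv=5)
search.fit(X_train, y_train)  # refits the best configuration on all training data

# 3. Evaluate the chosen model once on the untouched test set
test_accuracy = search.score(X_test, y_test)
print(search.best_params_, round(search.best_score_, 3), round(test_accuracy, 3))
```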

- What is the K-Nearest Neighbors (KNN) algorithm in machine learning?

Answer: KNN is a non-parametric and lazy learning algorithm that can be used for both classification and regression tasks. It works by finding the K closest data points in the training set to a new data point and using the majority class (for classification) or the average value (for regression) of those K neighbors to make predictions for the new data point.
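A from-scratch sketch of KNN classification with Euclidean distance and majority voting; `knn_predict` and the two-group toy data are hypothetical. For regression, the last step would return the mean of `y_train[nearest]` instead of the most common label.

```python
from collections import Counter

import numpy as np

def knn_predict(X_train, y_train, x_query, k=3):
    distances = np.linalg.norm(X_train - x_query, axis=1)  # Euclidean distance to every training point
    nearest = np.argsort(distances)[:k]                    # indices of the k closest points
    votes = Counter(y_train[nearest])
    return votes.most_common(1)[0][0]                      # majority class among the neighbors

X_train = np.array([[1.0, 1.0], [1.5, 2.0], [2.0, 1.0], [6.0, 6.0], [7.0, 7.5], [6.5, 7.0]])
y_train = np.array(["A", "A", "A", "B", "B", "B"])
print(knn_predict(X_train, y_train, np.array([1.8, 1.5]), k=3))  # A
print(knn_predict(X_train, y_train, np.array([6.2, 6.8]), k=3))  # B
```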

- What are the advantages and disadvantages of the KNN algorithm?

Answer: The KNN algorithm is simple to understand and implement, flexible (it works for both classification and regression), and effective at modelling non-linear decision boundaries. Its disadvantages include sensitivity to irrelevant features and to feature scaling, high computational cost at prediction time (every prediction requires computing distances to the training data), and the need to choose an appropriate value of K.

- How do we choose the value of K in the KNN algorithm?

Answer: The choice of K in the KNN algorithm can significantly affect its performance. A small value of K may result in overfitting and a high variance, while a large value of K may result in underfitting and a high bias. One common approach is to use cross-validation to find the optimal value of K that results in the lowest error rate or highest accuracy on the validation set.
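One way to run that cross-validation with scikit-learn, using the Iris dataset as a stand-in: the features are standardized inside a pipeline (KNN is distance-based, so scale matters), and each odd K from 1 to 15 is scored with 5-fold cross-validation. The range of K values is an arbitrary choice.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

scores = {}
for k in range(1, 16, 2):  # odd K values help avoid ties in two-class problems
    model = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=k))
    scores[k] = cross_val_score(model, X, y, cv=5).mean()

best_k = max(scores, key=scores.get)
print(best_k, round(scores[best_k], 3))
```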

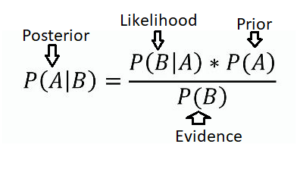

- What is the Naive Bayes algorithm in machine learning?

Answer: Naive Bayes is a probabilistic algorithm commonly used for classification tasks. It is based on Bayes' theorem: it calculates the probability of a data point belonging to each class from the prior probability of the class and the probabilities of the point's features given that class, and then assigns the point to the class with the highest probability.
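A short example with scikit-learn's `GaussianNB`, which assumes each feature follows a normal distribution within each class; the Iris dataset and the 70/30 split are arbitrary choices. `predict_proba` returns the posterior probability of each class, and `predict` picks the largest.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0)

nb = GaussianNB().fit(X_train, y_train)  # learns per-class priors, means and variances
proba = nb.predict_proba(X_test[:1])     # posterior P(class | features) for one flower
pred = nb.predict(X_test[:1])            # the class with the highest posterior
accuracy = nb.score(X_test, y_test)
print(proba.round(3), pred, round(accuracy, 3))
```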

- What are the assumptions of the Naive Bayes algorithm?

Answer: Naive Bayes algorithm makes the assumption of feature independence, meaning that it assumes that the features are conditionally independent given the class label. This assumption is often not true in practice, but Naive Bayes can still perform well if the features are only weakly correlated.

- Why is the Naive Bayes algorithm called “naive”?

Answer: The Naive Bayes algorithm is called “naive” because of its strong assumption of feature independence, which is often unrealistic for real-world data. It assumes that the features are conditionally independent given the class label, meaning that once the class is known, the value of one feature tells us nothing about the value of another. The assumption is naive because it ignores correlations between features, which are common in practice.

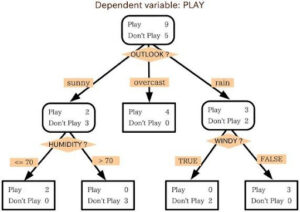

- What is a decision tree and how does it work?

Answer: A decision tree is a supervised learning algorithm commonly used for classification and regression problems. It recursively partitions the input space into regions based on the values of the input features, and each final region (a leaf) is assigned a class label or output value. The tree is built by selecting, at each node, the feature and threshold that best split the data according to an impurity measure such as Gini impurity or entropy (information gain), and then recursively applying the same splitting process to the resulting subsets of data.
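To see the learned partitions, the sketch below fits a shallow scikit-learn `DecisionTreeClassifier` on the Iris dataset and prints its rules with `export_text`; `max_depth=2` is an arbitrary limit chosen to keep the printout short.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()
tree = DecisionTreeClassifier(criterion="gini", max_depth=2, random_state=0)
tree.fit(iris.data, iris.target)

# Print the learned splits as nested if/else rules
print(export_text(tree, feature_names=list(iris.feature_names)))
```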

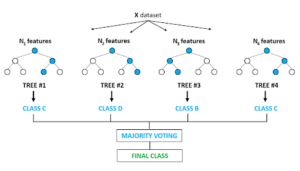

- What is a random forest, and how does it differ from a decision tree?

Answer: A random forest is an ensemble learning method that combines many decision trees, each trained on a bootstrap sample of the data and considering only a random subset of features at each split. The final prediction combines the predictions of all the trees (majority vote for classification, averaging for regression). A single decision tree tends to overfit and can change substantially with small changes in the data; a random forest reduces this variance and is more stable, while still handling both categorical and numerical data. The trade-off is that a forest of hundreds of trees is harder to interpret than a single tree.

- How is the splitting criterion determined in a decision tree?

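Answer: At each node, the algorithm evaluates candidate splits and chooses the one that produces the largest decrease in impurity, that is, the purest child nodes. Common impurity measures for classification are Gini impurity and entropy (the decrease in entropy is called information gain); regression trees typically use the reduction in variance or mean squared error.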

- What is pruning in decision trees?

Answer: Pruning is the process of removing branches from a decision tree that do not improve its accuracy on validation data. It is used to reduce overfitting.

- What is the role of bootstrap aggregating in building a random forest?

Answer: Bootstrap aggregating, or bagging, is the technique random forests use to improve accuracy and stability. It creates multiple random samples of the training data by sampling with replacement (bootstrap samples) and builds a decision tree on each sample. The predictions of all the trees are then combined, by majority vote for classification or by averaging for regression, to produce the final prediction.
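A quick NumPy illustration of what a bootstrap sample looks like: drawing n row indices with replacement from n rows leaves out roughly 1/e, about 36.8%, of the rows. Those left-out "out-of-bag" rows give each tree a built-in validation set (scikit-learn's `RandomForestClassifier` exposes this via `oob_score=True`). The sample size of 10,000 is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 10_000
indices = np.arange(n)

# One bootstrap sample: draw n row indices with replacement
bootstrap = rng.choice(indices, size=n, replace=True)
unique_fraction = len(np.unique(bootstrap)) / n
print(round(unique_fraction, 3))  # close to 1 - 1/e, about 0.632
```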

- How is feature importance determined in a random forest?

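Answer: In a random forest, the most common measure of feature importance is the mean decrease in impurity: for each feature, the impurity decrease (e.g., in Gini impurity or entropy) achieved by every split on that feature is added up, weighted by the number of samples reaching the split, and averaged across all trees. Features with the largest total decrease are considered the most important. Permutation importance, which measures how much a model's score drops when a feature's values are shuffled, is a common alternative.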

- What are entropy and Gini impurity?

Answer: Entropy is a measure of impurity that calculates the amount of disorder or randomness in the data. In other words, it measures how much uncertainty or surprise there is in the data. Entropy is calculated as follows:

Entropy = -∑(p(i) log2 p(i))

where p(i) is the proportion of samples in class i.

Gini impurity, on the other hand, measures the probability of incorrectly classifying a randomly chosen sample from the data set. It is calculated as follows:

Gini impurity = 1 – ∑(p(i)^2)

where p(i) is the proportion of samples in class i.

Both entropy and Gini impurity are used in decision tree algorithms to choose the best split. The split that yields the lowest weighted impurity in the child nodes, i.e. the largest decrease in impurity (called information gain when entropy is used), is chosen.
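The two formulas above translate directly into NumPy. The names `entropy`, `gini`, and `information_gain` are illustrative helpers, and the ten yes/no labels are invented. Entropy is written as the sum of p·log2(1/p), which is the same quantity as -∑ p log2 p but avoids printing -0.0 for a pure node.

```python
import numpy as np

def entropy(labels):
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(np.sum(p * np.log2(1 / p)))  # equals -sum(p * log2(p))

def gini(labels):
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(1 - np.sum(p ** 2))

def information_gain(parent, left, right):
    """Entropy of the parent minus the size-weighted entropy of the two children."""
    n = len(parent)
    weighted_children = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(parent) - weighted_children

parent = np.array(["yes"] * 5 + ["no"] * 5)
print(entropy(parent), gini(parent))          # 1.0 0.5 (a maximally mixed two-class node)
left, right = parent[:5], parent[5:]          # a split that separates the classes perfectly
print(information_gain(parent, left, right))  # 1.0
```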

- How do you prune a decision tree, and what are the types of pruning?

Answer: Pruning is a technique used in decision trees to reduce overfitting and improve the generalization performance of the model. It involves removing branches from the tree that do not contribute much to the overall accuracy of the model. Here are the basic steps involved in pruning a decision tree:

- Split the data into training and validation sets: The training set is used to train the decision tree model, and the validation set is used to evaluate its performance.

- Grow a full decision tree: A full decision tree is grown by recursively splitting the data based on the features that provide the highest information gain.

- Evaluate the performance of the decision tree on the validation set: The accuracy of the decision tree is evaluated on the validation set.

- Prune the decision tree: Starting from the bottom of the tree, each internal node is evaluated to determine whether replacing its subtree with a single leaf would reduce the accuracy on the validation set. If the accuracy does not decrease, the subtree is removed and the node becomes a leaf.

- Evaluate the performance of the pruned decision tree on the validation set: The accuracy of the pruned decision tree is evaluated on the validation set.

- Choose the best model: The decision tree with the highest accuracy on the validation set is chosen as the final model.

Pruning is classified into pre-pruning and post-pruning. Pre-pruning involves stopping the growth of the tree early before it becomes too complex. Post-pruning involves growing a full decision tree and then removing unnecessary branches.

Common methods for post-pruning include reduced error pruning, cost complexity pruning, and minimum description length (MDL) pruning.
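Cost complexity pruning, one of the post-pruning methods above, is available in scikit-learn through `cost_complexity_pruning_path` and the `ccp_alpha` parameter. The sketch below follows the validation-set procedure described above: it fits one tree per candidate alpha and keeps the alpha with the best validation accuracy. The breast cancer dataset and the default 75/25 split are arbitrary choices; in practice cross-validation gives a more reliable choice of alpha.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_val, y_train, y_val = train_test_split(X, y, random_state=0)

# Effective alphas at which subtrees of the fully grown tree would be pruned away
path = DecisionTreeClassifier(random_state=0).cost_complexity_pruning_path(X_train, y_train)

best_alpha, best_acc = 0.0, 0.0
for alpha in path.ccp_alphas:
    tree = DecisionTreeClassifier(random_state=0, ccp_alpha=alpha).fit(X_train, y_train)
    acc = tree.score(X_val, y_val)
    if acc > best_acc:  # keep the smallest alpha with the best validation accuracy
        best_alpha, best_acc = alpha, acc

full_tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
pruned_tree = DecisionTreeClassifier(random_state=0, ccp_alpha=best_alpha).fit(X_train, y_train)
print(full_tree.get_n_leaves(), "->", pruned_tree.get_n_leaves(), "leaves; val acc", round(best_acc, 3))

# Pre-pruning instead limits growth up front, e.g. DecisionTreeClassifier(max_depth=4, min_samples_leaf=5)
```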

- What is SVM?

Answer: SVM (Support Vector Machine) is a supervised learning algorithm used for classification and regression. For classification, it finds the hyperplane (a line in two dimensions, a plane in three, and a hyperplane in higher dimensions) that separates the classes with the largest possible margin.

- What is the kernel trick in SVM?

Answer: The kernel trick is a technique that lets SVM work as if the data had been mapped into a higher-dimensional space, where it may be easier to find a hyperplane that separates the classes, without ever computing that mapping. The kernel function calculates the dot product of two feature vectors in the transformed space directly from the original vectors.
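A tiny numeric check of the kernel trick, using the degree-2 polynomial kernel k(a, b) = (a·b)² on 2-D vectors. The explicit feature map `phi` (chosen here for illustration) sends each vector to three dimensions; the dot product in that space equals the kernel value computed in the original two dimensions, so the mapping never has to be materialized.

```python
import numpy as np

def phi(v):
    """Explicit degree-2 feature map for a 2-D vector (no bias term)."""
    x1, x2 = v
    return np.array([x1 * x1, np.sqrt(2) * x1 * x2, x2 * x2])

def poly_kernel(a, b):
    """Homogeneous polynomial kernel of degree 2: k(a, b) = (a . b)^2."""
    return np.dot(a, b) ** 2

a = np.array([1.0, 2.0])
b = np.array([3.0, 4.0])
print(np.dot(phi(a), phi(b)))  # about 121, computed in the 3-D feature space
print(poly_kernel(a, b))       # 121.0, computed directly in the original 2-D space
```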

- What are the different types of SVM?

Answer: The two main types of SVM are:

- Linear SVM: It uses a linear hyperplane to separate the classes in the feature space.

- Non-linear SVM: It uses a kernel function, such as a polynomial or radial basis function (RBF) kernel, to implicitly map the data into a higher-dimensional space where the classes are more easily separable.

- What is overfitting in SVM, and how can it be prevented?

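Answer: Overfitting in SVM occurs when the model is too complex and fits the training data too closely, resulting in poor generalization to new data. The main regularization control in SVM is the C parameter: a smaller C places a weaker penalty on misclassified training points, which gives a wider margin and a simpler decision boundary. With the RBF kernel, a smaller gamma also produces a smoother boundary. Both are usually tuned with cross-validation.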

- What are the different kernels in SVM and when to use them?

Answer: Common SVM kernels are the linear, polynomial, radial basis function (RBF), and sigmoid kernels. The linear kernel is used when the data is (close to) linearly separable or very high-dimensional, as with text features. The polynomial kernel is used when the classes can be separated by a polynomial boundary, i.e. when interactions between features up to a certain degree matter. The RBF kernel is a flexible, general-purpose choice for non-linear boundaries and a common default. The sigmoid kernel behaves like a neural network activation function and is used less often in practice. The kernel is usually chosen by comparing cross-validated performance.
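One way to compare kernels empirically is cross-validation on a dataset whose classes are not linearly separable. The sketch below uses scikit-learn's synthetic `make_moons` data (two interleaving half-circles) and default hyperparameters for each kernel. On this data the RBF kernel should score clearly higher than the linear kernel, but results on real data depend on tuning C, gamma, and degree.

```python
from sklearn.datasets import make_moons
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Two interleaving half-circles: not linearly separable
X, y = make_moons(n_samples=300, noise=0.1, random_state=0)

scores = {}
for kernel in ["linear", "poly", "rbf", "sigmoid"]:
    model = make_pipeline(StandardScaler(), SVC(kernel=kernel))
    scores[kernel] = cross_val_score(model, X, y, cv=5).mean()
    print(f"{kernel:8s} {scores[kernel]:.3f}")
```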

- What is the role of support vectors in SVM?

Answer: Support vectors are the data points closest to the decision boundary of an SVM model. They play a crucial role in defining the decision boundary and maximizing the margin between the two classes.
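With scikit-learn's `SVC`, the support vectors of a fitted model are available as `support_vectors_`, and their counts per class as `n_support_`. In the small, invented, linearly separable dataset below, only points closest to the boundary end up as support vectors; the remaining points could be removed without changing the decision boundary. A very large `C` is used to approximate a hard margin.

```python
import numpy as np
from sklearn.svm import SVC

# Small linearly separable toy set: class 0 near (1, 1), class 1 near (4, 4)
X = np.array([[1, 1], [2, 1], [1, 2], [4, 4], [5, 4], [4, 5]], dtype=float)
y = np.array([0, 0, 0, 1, 1, 1])

svm = SVC(kernel="linear", C=1000).fit(X, y)  # large C approximates a hard margin
print(svm.support_vectors_)  # only points on the margin, e.g. [4, 4] for class 1
print(svm.n_support_)        # number of support vectors per class
print(svm.predict([[0.0, 0.0], [6.0, 6.0]]))  # [0 1]
```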

- What is the difference between hard-margin and soft-margin SVM?

Answer: The hard margin SVM attempts to find a decision boundary that completely separates the two classes. In contrast, the soft margin SVM permits some misclassifications and determines a decision boundary that maximizes the margin while minimizing the misclassification error.

- Explain the difference between linear and non-linear SVM.

Answer: Linear SVM uses a linear decision boundary to separate the two classes, while non-linear SVM uses a non-linear decision boundary to separate the two classes. Non-linear SVM achieves this by transforming the input data into a higher dimensional space where it is more likely to be linearly separable.

- What is the role of the kernel function in SVM?

Answer: The kernel function in SVM is used to transform the input data into a higher dimensional space where it is more likely to be linearly separable. The choice of kernel function depends on the characteristics of the data and the specific problem being addressed.

- What is the meaning of gamma in SVM?

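Answer: Gamma is a hyperparameter of the RBF kernel (and also of the polynomial and sigmoid kernels) that controls how far the influence of a single training example reaches. A smaller gamma gives each point a wide influence and results in a smoother decision boundary, while a larger gamma gives each point a narrow influence and results in a more complex boundary that fits the training data more closely and can overfit.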

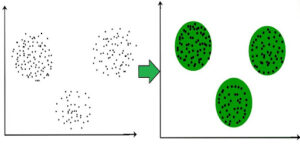

- What is clustering in machine learning?

Answer: Clustering is a type of unsupervised learning where the goal is to group similar data points together based on their characteristics or features. It involves finding patterns or structures in unlabelled data.

- What are some common applications of clustering in machine learning?

Answer:

- Customer segmentation: Clustering can be used to segment customers into groups based on their behaviour, preferences, and demographics. This can help businesses to target specific customer groups with personalized marketing strategies.

- Image segmentation: Clustering can be used to segment an image into regions based on the similarity of the pixels. This can be useful in image processing, computer vision, and object recognition tasks.

- Anomaly detection: Clustering can be used to identify outliers or anomalies in a dataset. This can be useful in fraud detection, network intrusion detection, and other applications where unusual behaviour needs to be detected.

- Recommendation systems: Clustering can be used to group similar items or users in recommendation systems. This can help to make personalized recommendations to users based on their preferences.

- Genomics: Clustering can be used to identify patterns in gene expression data, which can help to understand the underlying biological processes. This can be useful in drug discovery and personalized medicine.

- Social network analysis: Clustering can be used to identify communities or groups in social networks based on the connections between users. This can be useful in marketing and politics.

- What are the different types of clustering algorithms?

Answer: There are several types of clustering algorithms, including K-means, hierarchical clustering, density-based clustering, and model-based clustering.

- What is K-means clustering?

Answer: K-means is a popular clustering algorithm that partitions data points into K clusters. It starts with K initial cluster centers and then alternates two steps: assign each data point to the nearest cluster center, and move each center to the mean of the points assigned to it. The process repeats until the assignments stop changing (or a maximum number of iterations is reached).
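The two alternating steps translate into a short NumPy sketch. The helper names `assign` and `kmeans`, the random initialization from data points, and the two synthetic blobs are assumptions for illustration; scikit-learn's `KMeans` adds smarter initialization (k-means++) and multiple restarts.

```python
import numpy as np

def assign(X, centers):
    """Assignment step: index of the nearest center for every point."""
    distances = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
    return distances.argmin(axis=1)

def kmeans(X, k, n_iter=100, seed=0):
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)]  # start from k random data points
    for _ in range(n_iter):
        labels = assign(X, centers)
        # Update step: each center moves to the mean of its points (stays put if its cluster is empty)
        new_centers = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                                for j in range(k)])
        if np.allclose(new_centers, centers):  # converged: centers no longer move
            break
        centers = new_centers
    return assign(X, centers), centers

rng = np.random.default_rng(1)
X = np.vstack([rng.normal([0, 0], 0.5, size=(50, 2)),
               rng.normal([6, 6], 0.5, size=(50, 2))])
labels, centers = kmeans(X, k=2)
print(np.round(centers, 1))  # roughly one center near (0, 0) and one near (6, 6)
```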

- What is hierarchical clustering?

Answer: Hierarchical clustering is a clustering algorithm that creates a hierarchy of clusters by either recursively merging smaller clusters into larger ones (agglomerative) or recursively splitting larger clusters into smaller ones (divisive).
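An agglomerative example with SciPy: `linkage` builds the merge hierarchy (Ward linkage here, an arbitrary choice) and `fcluster` cuts it into a fixed number of flat clusters. The six points are invented so that three groups are obvious; `scipy.cluster.hierarchy.dendrogram` can plot the hierarchy.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage

X = np.array([[1.0, 1.0], [1.2, 0.9], [0.8, 1.1],
              [5.0, 5.0], [5.1, 4.8],
              [9.0, 1.0]])

# Agglomerative: start with every point as its own cluster and repeatedly merge the
# two closest clusters (Ward linkage minimizes the increase in within-cluster variance)
Z = linkage(X, method="ward")

# Cut the hierarchy so that at most 3 flat clusters remain
labels = fcluster(Z, t=3, criterion="maxclust")
print(labels)
```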

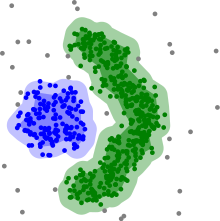

- What is density-based clustering?

Answer: Density-based clustering refers to algorithms, such as DBSCAN, that identify clusters as regions of high density separated by regions of low density. DBSCAN, for example, identifies “core points” that have at least a minimum number of neighboring points within a specified radius, groups together all points that are reachable from a core point, and labels points that are not reachable from any core point as noise.

- What is the elbow method in clustering?

Answer: The elbow method is a technique used to determine the optimal number of clusters in K-means clustering. It involves plotting the within-cluster sum of squares (WCSS) against the number of clusters and selecting the number of clusters where the rate of decrease in WCSS slows down, resembling an elbow shape in the plot.

- What is the silhouette score in clustering?

Answer: The silhouette score is a metric used to evaluate the quality of clustering results. It measures how similar an object is to its own cluster compared to other clusters, and ranges from -1 to 1, with higher values indicating better clustering.
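Both the elbow method and the silhouette score can be tried with scikit-learn on synthetic data with three well-separated groups (the centers and spread below are invented). The loop records the WCSS (`inertia_`) for the elbow plot and the silhouette score for each K; the silhouette score is only defined for K ≥ 2.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

X, _ = make_blobs(n_samples=300, centers=[[0, 0], [5, 5], [0, 5]],
                  cluster_std=0.5, random_state=0)

wcss, silhouette = {}, {}
for k in range(1, 7):
    km = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    wcss[k] = km.inertia_                                 # within-cluster sum of squares
    if k >= 2:                                            # silhouette needs at least 2 clusters
        silhouette[k] = silhouette_score(X, km.labels_)

for k in wcss:
    print(k, round(wcss[k], 1), round(silhouette.get(k, float("nan")), 3))

best_k = max(silhouette, key=silhouette.get)  # expected to be 3 for this data
```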

- What is PCA in machine learning?

Answer: PCA stands for Principal Component Analysis, which is a dimensionality reduction technique used to transform high-dimensional data into a lower-dimensional space while preserving most of the variability in the data.

- How does PCA work?

Answer: The process of PCA involves determining the directions or axes of maximum variance in the dataset, and then projecting the data onto these axes to create a lower-dimensional representation while preserving as much information as possible. The first principal component explains the most variance in the data, and each subsequent component explains as much variance as possible while being orthogonal to the previous components.

- How do you choose the number of principal components to use?

Answer: One common approach is to look at the explained variance ratio for each principal component, and choose the number of components that explain a sufficient amount of the total variance in the data. Another approach is to use cross-validation to evaluate the performance of the machine learning algorithm using different numbers of components.
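A NumPy sketch of both steps: PCA via the singular value decomposition of the centered data, then choosing the number of components that explains at least 95% of the variance (a common but arbitrary cut-off). The three-feature dataset is synthetic, with the third feature nearly equal to the sum of the first two, so two components should suffice. scikit-learn's `PCA(n_components=0.95)` performs the same kind of selection.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy data: 3 features, where the third is almost the sum of the first two
base = rng.normal(size=(200, 2))
X = np.column_stack([base[:, 0], base[:, 1],
                     base[:, 0] + base[:, 1] + rng.normal(scale=0.05, size=200)])

# 1. Center the data (principal directions are defined around the mean)
X_centered = X - X.mean(axis=0)
# 2. SVD: rows of Vt are the principal directions, ordered by decreasing variance
U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
explained_variance_ratio = S ** 2 / np.sum(S ** 2)
# 3. Smallest number of components whose cumulative explained variance reaches 95%
n_components = int(np.searchsorted(np.cumsum(explained_variance_ratio), 0.95) + 1)
# 4. Project the data onto the chosen components
X_reduced = X_centered @ Vt[:n_components].T
print(explained_variance_ratio.round(3), n_components, X_reduced.shape)
```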

- What is ensemble learning in machine learning?

Answer: Ensemble learning is a technique in machine learning where multiple models are combined to improve the overall performance and accuracy of the model. Instead of relying on a single model, ensemble learning uses multiple models and combines their predictions to make the final prediction.

- What are some popular ensemble learning techniques?

Answer: Some popular ensemble learning techniques are:

- Bagging: In bagging, multiple instances of the same model are trained on different bootstrap samples of the data, and their outputs are averaged (or combined by majority vote) to make the final prediction.

- Boosting: In boosting, a series of weak models are trained sequentially, with each model trying to improve upon the errors of the previous model.

- Stacking: In stacking, multiple models with different architectures are trained on the same data, and their outputs are combined using a meta-model to make the final prediction.

- Random Forest: Random Forest is an ensemble learning algorithm that uses decision trees as base models. It trains multiple decision trees on different subsets of the data, and their outputs are averaged to make the final prediction.

- What is deep learning, and how is it different from traditional machine learning?

Answer: Deep learning is a subfield of machine learning based on artificial neural networks with many layers. Traditional machine learning algorithms usually rely on features engineered by hand, whereas deep learning algorithms learn useful features directly from raw data. This lets deep learning models learn more complex representations, which makes them well suited to tasks like image recognition and natural language processing, although they typically need more data and computation.

- What are some popular deep learning frameworks?

Answer: Some popular deep learning frameworks include TensorFlow, Keras, PyTorch, and Caffe.

- What is backpropagation, and how is it used in deep learning?

Answer: Backpropagation is the algorithm used to compute gradients when training artificial neural networks. After a forward pass computes the network's output and the loss, backpropagation applies the chain rule layer by layer, from the output back to the input, to obtain the gradient of the loss with respect to every weight and bias. An optimizer such as gradient descent then uses these gradients to update the parameters, and the process repeats throughout training.
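A minimal NumPy sketch of one forward pass, one backward pass, and one gradient-descent step for a tiny two-layer network (tanh hidden layer, mean squared error loss). The network sizes, random data, and learning rate are arbitrary. A finite-difference check on one weight confirms that the chain-rule gradient is correct, a common way to test a hand-written backward pass.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))   # 8 samples, 3 input features
y = rng.normal(size=(8, 1))   # regression targets
W1 = rng.normal(scale=0.5, size=(3, 4))
b1 = np.zeros(4)
W2 = rng.normal(scale=0.5, size=(4, 1))
b2 = np.zeros(1)

def loss_fn(W1, b1, W2, b2):
    """Forward pass: tanh hidden layer, linear output, mean squared error."""
    h = np.tanh(X @ W1 + b1)
    return np.mean((h @ W2 + b2 - y) ** 2)

# Forward pass, keeping the intermediate values needed by the backward pass
h = np.tanh(X @ W1 + b1)
y_hat = h @ W2 + b2

# Backward pass: chain rule from the loss back towards the inputs
d_yhat = 2 * (y_hat - y) / y.size  # dL/dy_hat for the mean squared error
dW2 = h.T @ d_yhat
db2 = d_yhat.sum(axis=0)
d_h = d_yhat @ W2.T                # gradient flowing into the hidden layer
d_z1 = d_h * (1 - h ** 2)          # tanh'(z) = 1 - tanh(z)^2
dW1 = X.T @ d_z1
db1 = d_z1.sum(axis=0)

# Gradient check on one weight using central finite differences
eps = 1e-6
W1_plus, W1_minus = W1.copy(), W1.copy()
W1_plus[0, 0] += eps
W1_minus[0, 0] -= eps
numeric = (loss_fn(W1_plus, b1, W2, b2) - loss_fn(W1_minus, b1, W2, b2)) / (2 * eps)
print(dW1[0, 0], numeric)  # the two numbers should agree to several decimal places

# One gradient-descent update with learning rate 0.1
lr = 0.1
loss_before = loss_fn(W1, b1, W2, b2)
W1, b1 = W1 - lr * dW1, b1 - lr * db1
W2, b2 = W2 - lr * dW2, b2 - lr * db2
print(loss_before, loss_fn(W1, b1, W2, b2))  # a small step along the negative gradient should typically lower the loss
```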

- What is a convolutional neural network, and how is it used in deep learning?

Answer: A Convolutional Neural Network (CNN) is a type of neural network used primarily for image recognition and other grid-structured data. CNNs use convolution: a small window of weights (a filter or kernel) slides over the input image, and at each position the dot product between the filter and the corresponding pixels is computed. This produces a feature map, which is passed through a nonlinear activation function. The result is then fed into further convolutional layers (often with pooling in between) or into fully connected layers for further processing.
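A from-scratch NumPy sketch of a single convolution step on a tiny invented image: `conv2d_valid` (a hypothetical helper) slides a 3×3 kernel with stride 1 and no padding, computing a dot product at each position, and a ReLU is then applied. The kernel responds to left-to-right increases in brightness, so the feature map lights up at the vertical edge in the middle of the image.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and take a dot product at each position.
    Like most deep learning libraries, this is technically cross-correlation (the kernel is not flipped)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

image = np.array([[0, 0, 0, 10, 10, 10]] * 4, dtype=float)  # dark left half, bright right half
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)             # vertical edge detector
feature_map = np.maximum(conv2d_valid(image, kernel), 0)     # ReLU activation
print(feature_map)  # [[0, 30, 30, 0], [0, 30, 30, 0]]: strong response at the edge
```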

- What is a recurrent neural network, and how is it used in deep learning?

Answer: A recurrent neural network (RNN) is a type of neural network that is used primarily for sequential data processing tasks, such as speech recognition and natural language processing. RNNs use feedback connections to allow information to persist across time steps, which enables them to process sequences of arbitrary length. RNNs can be trained using the backpropagation algorithm, which involves unrolling the network over time and applying backpropagation through time (BPTT) to update the weights and biases.

- What is transfer learning, and how is it used in deep learning?

Answer: Transfer learning is a technique employed in deep learning, wherein a pre-trained model serves as an initial point for a new task. The pre-trained model has already been trained on a large dataset, which means it has learned to recognize many different features. By using a pre-trained model as a starting point, the new model can learn to recognize new features more quickly and with less data. Transfer learning is especially useful when working with small datasets, as it can help prevent overfitting.

- What is dropout, and how is it used in deep learning?

Answer: Dropout is a regularization technique used in deep learning to reduce overfitting. During training, a random fraction of the units (neurons) in a layer is set to zero at each training step, so the network cannot rely on any single unit and must learn redundant representations, which can improve its generalization performance. At test time dropout is turned off, and the activations are rescaled (either at test time or, in the common "inverted" variant, during training) so that their expected values match. Dropout can be thought of as training an implicit ensemble of many thinned sub-networks that share weights, whose predictions are approximately averaged at test time.
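A NumPy sketch of "inverted" dropout, the variant most frameworks use, where the scaling is applied during training instead of at test time. The helper `dropout`, the drop probability of 0.5, and the all-ones activations are illustrative assumptions.

```python
import numpy as np

def dropout(activations, p_drop, training, rng):
    """Inverted dropout: zero out units with probability p_drop during training and scale
    the survivors by 1 / (1 - p_drop) so the expected activation is unchanged."""
    if not training or p_drop == 0.0:
        return activations  # at inference time the layer does nothing
    keep_mask = rng.random(activations.shape) >= p_drop
    return activations * keep_mask / (1.0 - p_drop)

rng = np.random.default_rng(0)
a = np.ones((1000, 100))
out = dropout(a, p_drop=0.5, training=True, rng=rng)
print((out == 0).mean())  # roughly 0.5 of the units are dropped
print(out.mean())         # roughly 1.0: the scaling preserves the expected value
```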

- What is LSTM?

Answer: LSTM stands for Long Short-Term Memory. It is a type of recurrent neural network (RNN) designed mainly to mitigate the vanishing gradient problem of traditional RNNs (exploding gradients are usually handled separately, for example with gradient clipping). LSTM networks can capture long-term dependencies in sequential data such as text, audio, or time series, and are commonly used in applications such as natural language processing and speech recognition.

- How do LSTM networks work?

Answer: An LSTM unit maintains a memory cell (the cell state) that carries information across time steps, and three gates control it: an input gate, a forget gate, and an output gate. Each gate is computed from the current input and the previous hidden state. The forget gate decides which values in the cell state to keep or discard, the input gate decides which new candidate values to write into the cell, and the output gate decides which parts of the updated cell state to expose as the output (hidden state) for the current time step.
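The gate mechanism described above can be written as a single NumPy time step. The weight shapes, the small random initialization, and the order in which the four blocks (input gate, forget gate, candidate values, output gate) are packed into one matrix are assumptions made for this sketch; frameworks use their own layouts.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step. W: (input_dim, 4*hidden), U: (hidden, 4*hidden), b: (4*hidden,)."""
    z = x_t @ W + h_prev @ U + b
    i, f, g, o = np.split(z, 4, axis=-1)
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)  # gates take values in (0, 1)
    g = np.tanh(g)                                # candidate values for the cell
    c_t = f * c_prev + i * g                      # forget part of the old memory, write new content
    h_t = o * np.tanh(c_t)                        # output gate decides what to expose
    return h_t, c_t

rng = np.random.default_rng(0)
input_dim, hidden = 3, 5
W = rng.normal(scale=0.1, size=(input_dim, 4 * hidden))
U = rng.normal(scale=0.1, size=(hidden, 4 * hidden))
b = np.zeros(4 * hidden)

h, c = np.zeros(hidden), np.zeros(hidden)
sequence = rng.normal(size=(7, input_dim))  # a sequence of 7 time steps
for x_t in sequence:
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape, c.shape)  # (5,) (5,)
```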

- What are some common SQL commands used in data science?

Answer: Common SQL commands used in data science include SELECT, FROM, WHERE, JOIN, GROUP BY, HAVING, and ORDER BY. These commands are used to retrieve and manipulate data from relational databases.

- How do you join tables in SQL?

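Answer: To join tables in SQL, you write JOIN followed by the name of the table to join and an ON clause that specifies the matching condition (for example, ON orders.customer_id = customers.id). The main join types differ in which rows they keep. INNER JOIN returns only rows that have a match in both tables. LEFT JOIN keeps all rows from the left table and fills in NULLs where there is no match. RIGHT JOIN does the same for the right table. FULL OUTER JOIN keeps all rows from both tables.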
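A small sqlite3 sketch contrasting INNER JOIN and LEFT JOIN on two made-up tables. Only these two join types are shown because SQLite supports RIGHT and FULL OUTER JOIN only from version 3.39.0 onward, and older Python builds may bundle an earlier SQLite.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'alice'), (2, 'bob'), (3, 'carol');
INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0);
""")

# INNER JOIN: only customers that have at least one matching order.
inner = conn.execute("""
SELECT c.name, o.amount
FROM customers AS c
INNER JOIN orders AS o ON o.customer_id = c.id
ORDER BY o.id
""").fetchall()

# LEFT JOIN: every customer; order columns are NULL (None) when there is no match.
left = conn.execute("""
SELECT c.name, o.amount
FROM customers AS c
LEFT JOIN orders AS o ON o.customer_id = c.id
ORDER BY c.id, o.id
""").fetchall()

print(inner)  # [('alice', 25.0), ('alice', 40.0), ('bob', 15.0)]
print(left)   # [('alice', 25.0), ('alice', 40.0), ('bob', 15.0), ('carol', None)]
```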

- How do you handle missing data in SQL?

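Answer: To handle missing data in SQL, you can use the IS NULL and IS NOT NULL operators to find or filter out rows with missing values. Comparisons such as = NULL never match. To replace missing values with a default, use the standard COALESCE function or a database-specific equivalent such as IFNULL (MySQL, SQLite) or ISNULL (SQL Server).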
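A short sqlite3 sketch of these techniques on a made-up readings table. It filters with IS NULL and IS NOT NULL, fills missing values with a constant via COALESCE, and shows that = NULL matches nothing. The mean-imputation query is one common pattern, included for illustration. It relies on AVG ignoring NULLs.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE readings (sensor TEXT, value REAL);
INSERT INTO readings VALUES ('a', 1.5), ('b', NULL), ('c', 3.0);
""")

missing = conn.execute("SELECT sensor FROM readings WHERE value IS NULL").fetchall()
complete = conn.execute(
    "SELECT sensor FROM readings WHERE value IS NOT NULL ORDER BY sensor").fetchall()

# Replace NULL with a fixed default.
filled = conn.execute(
    "SELECT sensor, COALESCE(value, 0.0) FROM readings ORDER BY sensor").fetchall()

# Replace NULL with the column mean (AVG skips NULLs, so the mean is (1.5 + 3.0) / 2).
imputed = conn.execute("""
SELECT sensor, COALESCE(value, (SELECT AVG(value) FROM readings))
FROM readings ORDER BY sensor
""").fetchall()

# Comparing with = NULL yields NULL (unknown), so no rows are returned.
wrong = conn.execute("SELECT sensor FROM readings WHERE value = NULL").fetchall()

print(missing, complete, filled, imputed, wrong)
```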

- What is big data?

Answer: Big data refers to extremely large and complex data sets that cannot be easily processed using traditional data processing methods.

- Can you list the three V’s that are commonly associated with Big Data?

Answer: The three Vs of big data are volume, velocity, and variety. Volume refers to the large amount of data, velocity refers to the speed at which data is generated and processed, and variety refers to the different types of data, such as structured, unstructured, and semi-structured.

- What is Hadoop?

Answer: Hadoop is an open-source framework for storing and processing massive datasets across clusters of computers. It provides a distributed file system (HDFS) and supports parallel processing of large datasets using the MapReduce programming model.
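The MapReduce model can be sketched in plain Python with the classic word-count example. This is not Hadoop's API: the function names are hypothetical and everything runs in a single process. Hadoop, by contrast, runs many map and reduce tasks in parallel across a cluster and performs the shuffle over the network.

```python
from collections import defaultdict
from itertools import chain

def map_phase(line):
    """Map: emit a (key, value) pair for every word in one input record."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: combine all values for one key."""
    return key, sum(values)

def word_count(lines):
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())

print(word_count(["big data is big", "data is everywhere"]))
# {'big': 2, 'data': 2, 'is': 2, 'everywhere': 1}
```

Because each map call depends on only one record and each reduce call on only one key, both phases can be spread across many machines, which is what makes the model scale.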

- What is Spark?

Answer: Spark is an open-source engine for large-scale data processing. By keeping intermediate data in memory where possible, it is often much faster than Hadoop MapReduce, especially for iterative workloads such as machine learning. It also provides libraries for SQL queries, stream processing, and machine learning.

- What are NoSQL databases?

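Answer: NoSQL databases are non-relational databases designed to handle large volumes of unstructured or semi-structured data with flexible schemas. Common types include key-value stores, document databases, wide-column stores, and graph databases. They are often used in big data applications because they can handle large volumes of data and scale horizontally across many machines.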

- What is cloud computing?

Answer: Cloud computing refers to the delivery of computing services, including storage, processing, and software, over the Internet. It provides scalability, flexibility, and cost savings for big data applications.

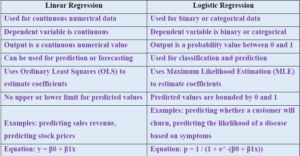

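- What is the difference between linear regression and logistic regression?

Answer: Linear regression is used for regression problems. It models a continuous target as a linear combination of the input features and is typically fitted by minimizing the sum of squared errors (ordinary least squares), so its predictions can be any real number.

Logistic regression is used for classification. It passes the same kind of linear combination through the sigmoid (logistic) function to produce a probability between 0 and 1 that an observation belongs to the positive class. It is fitted by maximizing the likelihood (equivalently, minimizing log loss), and a threshold such as 0.5 turns the predicted probability into a class label.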

- What is the difference between precision and recall, and when are they important to consider in a classification problem? Can you provide some examples of situations where a false positive or a false negative could be costly, and explain why precision or recall, respectively, is important in those cases?

Answer: Precision and recall are both important metrics in evaluating the performance of classification models.

Precision measures the proportion of true positives (i.e., the cases that were correctly classified as positive) among all positive predictions made by the model. In other words, it measures how accurate the model is when it predicts that a certain case belongs to the positive class.

Prioritize precision when the cost of a false positive is high.

For example:

In medical diagnosis, a false positive (predicting that a patient has a disease when they actually don’t) could lead to unnecessary treatments and procedures that are costly and potentially harmful.

In credit fraud detection, a false positive (flagging a legitimate transaction as fraudulent) could result in blocking a legitimate purchase, which can negatively impact the customer experience and cause inconvenience.

Recall measures the proportion of true positives among all actual positive cases. In other words, it measures how well the model identifies all the positive cases.

Recall is important when the cost of a false negative is high.

For example:

In medical diagnosis, a false negative (failing to diagnose a disease in a patient who actually has it) could delay treatment and lead to further complications or even death.

In spam email detection, a false negative (failing to flag a spam email as spam) could result in the user receiving unwanted emails, which can be a nuisance and potentially harmful if the email contains malicious content.

In summary, precision is important when the cost of a false positive is high, while recall is important when the cost of a false negative is high. In practice, the choice of which metric to prioritize depends on the specific context and goals of the classification problem.
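Both metrics can be computed directly from the confusion-matrix counts, as in this minimal sketch for binary labels. The labels are made up for illustration. Returning 0.0 when a ratio is undefined (for example, when there are no positive predictions) is a common convention assumed here, not the only option.

```python
def precision_recall(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # correct alarms
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false alarms
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # missed positives
    precision = tp / (tp + fp) if tp + fp else 0.0  # TP / all predicted positives
    recall = tp / (tp + fn) if tp + fn else 0.0     # TP / all actual positives
    return precision, recall

y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
print(precision_recall(y_true, y_pred))  # (0.666..., 0.5): TP = 2, FP = 1, FN = 2
```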

- What are some common challenges of working with big data?

Answer: Some common challenges of working with big data include data quality issues, processing and storage limitations, privacy and security concerns, and the need for specialized skills and tools.

- In a classification problem with imbalanced classes, where the majority class makes up 90% of the data and the minority class makes up 10%, a machine learning model achieves an accuracy score of 90%. Is this model good enough to deploy in a real-world scenario? Why or why not?

Answer: While an accuracy score of 90% may seem high at first glance, it can be misleading in cases of imbalanced classes. In this scenario, a model that simply classifies all instances as the majority class (i.e., without any actual learning taking place) would achieve an accuracy score of 90%, but it would be useless for practical purposes.
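A quick sketch of this trap on synthetic labels with the same 90/10 split: a "model" that always predicts the majority class reaches 90% accuracy but catches none of the minority cases.

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def recall(y_true, y_pred, positive=1):
    preds_on_actual_pos = [p for t, p in zip(y_true, y_pred) if t == positive]
    if not preds_on_actual_pos:
        return 0.0
    return sum(p == positive for p in preds_on_actual_pos) / len(preds_on_actual_pos)

y_true = [0] * 90 + [1] * 10  # 90% majority class (0), 10% minority class (1)
y_pred = [0] * 100            # baseline that always predicts the majority class

print(accuracy(y_true, y_pred))  # 0.9
print(recall(y_true, y_pred))    # 0.0 -> no minority case is ever detected
```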

In such cases, it is important to look beyond accuracy and consider other metrics such as precision, recall, and F1-score. These are computed per class and account for false positives and false negatives separately. For example, if the minority class is the one of greater interest, achieving high recall for that class may be more important than overall accuracy.

Furthermore, it may be necessary to rebalance the training data by oversampling the minority class or undersampling the majority class, to use class weights, or to use more advanced algorithms such as ensemble methods, in order to improve the model’s performance on the minority class.
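Random oversampling, the simplest of these techniques, can be sketched in plain Python. It assumes binary labels, and the function name is hypothetical. Libraries such as imbalanced-learn offer this and more sophisticated variants. Rebalancing should be applied only to the training split, after the train/test split, so that duplicated minority rows do not leak into the evaluation data.

```python
import random
from collections import Counter

def random_oversample(X, y, minority=1, seed=0):
    """Duplicate randomly chosen minority rows until both classes are the same size."""
    rng = random.Random(seed)
    minority_idx = [i for i, label in enumerate(y) if label == minority]
    if not minority_idx:
        raise ValueError("no minority samples to oversample")
    n_majority = len(y) - len(minority_idx)
    n_extra = max(0, n_majority - len(minority_idx))
    extra_idx = [rng.choice(minority_idx) for _ in range(n_extra)]  # sample with replacement
    order = list(range(len(y))) + extra_idx
    rng.shuffle(order)
    return [X[i] for i in order], [y[i] for i in order]

X = [[float(i)] for i in range(100)]  # synthetic feature rows
y = [0] * 90 + [1] * 10               # 90/10 class imbalance
X_bal, y_bal = random_oversample(X, y)
print(Counter(y_bal))  # Counter({0: 90, 1: 90})
```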

- Suppose you are working on an anomaly detection problem where the goal is to detect fraudulent transactions in a credit card dataset. The dataset contains 100,000 transactions, of which only 1,000 (1%) are labelled as fraud. You decide to use an unsupervised learning approach with a one-class support vector machine (SVM) to detect anomalies in the dataset. After training the model, you obtain a precision of 90% and a recall of 70% on the fraud class. Is this model likely to perform well on new, unseen data? Why or why not?

Answer: In this scenario, the one-class SVM model achieved a relatively high precision of 90%, meaning that out of all transactions that the model classified as fraud, 90% were actually fraudulent. However, the recall of 70% indicates that the model missed 30% of the actual fraud transactions, which is a relatively high false negative rate.

Given the highly imbalanced nature of the dataset, achieving a high precision is important to minimize false positives, which would be flagged as potential fraud and require further investigation. However, missing actual fraud transactions is still a significant issue, and a high precision score does not necessarily imply good performance on new, unseen data.

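Therefore, it is difficult to determine whether the one-class SVM model is likely to perform well on new, unseen data without further evaluation. In particular, precision and recall should be measured on held-out data (or with cross-validation) that reflects the real class balance, not only on the data the model was fitted to. It may also help to look at other evaluation metrics, such as the F1 score, the precision-recall curve, or the receiver operating characteristic (ROC) curve, and to consider the specific costs associated with false positives and false negatives.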
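It can help to translate the reported percentages into counts. This sketch assumes that the 90% precision and 70% recall were measured over all 1,000 labelled fraud cases, an assumption the question does not state explicitly. It also computes the F1 score as the harmonic mean of precision and recall.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0

n_fraud = 1_000
precision, recall = 0.90, 0.70

tp = round(recall * n_fraud)     # frauds caught: 700
fn = n_fraud - tp                # frauds missed: 300
fp = round(tp / precision - tp)  # legitimate transactions flagged: about 78
f1 = f1_score(precision, recall)

print(tp, fn, fp, round(f1, 4))  # 700 300 78 0.7875
```

Under this assumption, the model raises only about 78 false alarms among 99,000 legitimate transactions but still misses 300 frauds. This makes the cost trade-off easier to discuss than the percentages alone.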

- What are some examples of when to remove or keep outliers in machine learning? Why is it important to investigate outliers carefully before deciding whether to remove them or not?

Answer: Here are some examples of when to remove or keep outliers in machine learning:

Regression: In linear regression, outliers can have a significant impact on the slope of the regression line and the accuracy of the model. In this case, it may be appropriate to investigate the outliers and consider removing them if they are found to be spurious or erroneous.

Technical errors: In some cases, outliers may be the result of technical errors, such as data entry mistakes or system glitches. In this case, it may be appropriate to remove the outliers or correct the errors to ensure the accuracy of the model.

Financial data: Suppose a company is analysing the stock price of a particular stock over a period of time. The dataset contains a few extreme values that are much higher or lower than the rest of the data. In this case, it may be appropriate to keep the outliers and investigate their potential causes (e.g., unexpected market events or corporate announcements) to gain insights into the underlying trends and patterns in the data.

Fraudulent transactions: In credit fraud detection, transactions that are significantly different from the typical transaction patterns of a customer or a group of customers may be flagged as potential outliers. In this case, it may be appropriate to keep the outliers and investigate them further to determine whether they represent fraudulent activity.

Legitimate but unusual transactions: Some legitimate transactions may be flagged as outliers due to their unusual nature, such as large purchases or overseas transactions. In this case, it may be appropriate to keep the outliers and investigate them further to ensure that they are legitimate.
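The regression case can be illustrated with a small sketch. A single corrupted point (hypothetical data: y = 2x with one data-entry error) noticeably pulls the least-squares slope away from 2. The interquartile-range rule (Tukey's fences, flagging values outside Q1 - 1.5·IQR and Q3 + 1.5·IQR) is used here as one common flagging heuristic; it is not the only option. Flagged points should be investigated before anything is removed, as the examples above show.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return indices of values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < low or v > high]

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys on xs."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den

xs = list(range(10))
ys = [2 * x for x in xs]
ys[9] = 100  # hypothetical data-entry error: the true value would be 18

flagged = iqr_outliers(ys)
slope_all = ols_slope(xs, ys)
keep = [i for i in range(len(xs)) if i not in flagged]
slope_clean = ols_slope([xs[i] for i in keep], [ys[i] for i in keep])
print(flagged, slope_all, slope_clean)  # the corrupted point more than triples the slope
```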

- What is NLP?

Answer: NLP (natural language processing) is a subfield of computer science and artificial intelligence that focuses on the interaction between computers and humans in natural language. NLP enables machines to understand, interpret, and generate human language.

- What are some popular NLP libraries in Python?

Answer: Python has several widely used NLP libraries, including NLTK (Natural Language Toolkit), spaCy, Gensim, TextBlob, and scikit-learn. These libraries provide tools and techniques for performing various NLP tasks such as text preprocessing, feature extraction, and modeling.

- What are some common NLP tasks?

Answer: Some common NLP tasks include text classification, sentiment analysis, named entity recognition, topic modeling, language translation, and text summarization. These tasks are used in various applications such as chatbots, customer service, social media analysis, and search engines.

- What is tokenization in NLP?

Answer: Tokenization is the process of splitting text into smaller, meaningful units called tokens. Depending on the task, tokens are usually words, subwords, or punctuation marks; splitting a document into sentences (sentence tokenization) is a closely related step. Tokenization is an important step in text processing because it allows us to analyze and manipulate text at a more granular level.
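A minimal regular-expression tokenizer and a naive sentence splitter, both written only for illustration. Libraries such as NLTK and spaCy handle contractions, abbreviations, and other edge cases far better. For example, the pattern below splits "Don't" into three tokens.

```python
import re

# A token is either a run of word characters or a single punctuation character.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")

def tokenize(text):
    return TOKEN_PATTERN.findall(text)

def sentence_split(text):
    """Naive sentence tokenization: split after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

print(tokenize("Hello, world!"))                  # ['Hello', ',', 'world', '!']
print(sentence_split("NLP is fun. Tokenize it!"))  # ['NLP is fun.', 'Tokenize it!']
```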

- What is stemming in NLP?

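Answer: Stemming is the process of reducing a word to its stem, usually by stripping suffixes with simple heuristic rules. Stemming is useful in NLP because it allows us to normalize variations of the same word. For example, “runs” and “running” can both be stemmed to “run.” Because stemming only chops off word endings, the resulting stem is not always a dictionary word (the Porter stemmer, for instance, reduces “studies” to “studi”), and irregular forms such as “ran” are left unchanged.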
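A deliberately crude suffix-stripping stemmer shows the idea. It is a hypothetical toy with only four rules, not the Porter algorithm. For real work, use an established stemmer such as NLTK's PorterStemmer or SnowballStemmer.

```python
SUFFIXES = ("ing", "ed", "es", "s")  # checked in this order; first match wins

def toy_stem(word):
    """Strip one common suffix, keeping a stem of at least 3 letters."""
    w = word.lower()
    for suffix in SUFFIXES:
        if w.endswith(suffix) and len(w) - len(suffix) >= 3:
            stem = w[: -len(suffix)]
            # Undouble a final consonant ("runn" -> "run"), but keep "ll"/"ss" etc.
            if stem[-1] == stem[-2] and stem[-1] not in "aeiouls":
                stem = stem[:-1]
            return stem
    return w

for w in ["running", "runs", "jumped", "studies", "ran"]:
    print(w, "->", toy_stem(w))
# running -> run, runs -> run, jumped -> jump, studies -> studi, ran -> ran
```

The output shows both limitations mentioned above: "studi" is not a real word, and the irregular form "ran" is not connected to "run".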

- What is lemmatization in NLP?

Answer: Lemmatization is similar to stemming, but it reduces a word to its base or dictionary form, known as the lemma, using a vocabulary and morphological analysis. Lemmatization is usually more accurate than stemming because it takes the word's part of speech (and, in some tools, its context) into account and returns a real word. For example, the verb “went” is lemmatized to “go”, which no suffix-stripping stemmer could produce.
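The role of the part of speech can be illustrated with a tiny lookup-based lemmatizer. The table below is a hypothetical stand-in for the large lexicons and morphological rules that real lemmatizers (for example, NLTK's WordNetLemmatizer or spaCy) rely on. Note how "meeting" gets a different lemma as a noun than as a verb.

```python
# Hypothetical mini-lexicon keyed by (word, part of speech).
LEMMA_TABLE = {
    ("went", "VERB"): "go",
    ("gone", "VERB"): "go",
    ("better", "ADJ"): "good",
    ("mice", "NOUN"): "mouse",
    ("meeting", "NOUN"): "meeting",
    ("meeting", "VERB"): "meet",
}

def lemmatize(word, pos):
    """Look up the lemma for (word, pos); fall back to the lowercased word."""
    return LEMMA_TABLE.get((word.lower(), pos), word.lower())

print(lemmatize("went", "VERB"))     # go
print(lemmatize("meeting", "NOUN"))  # meeting
print(lemmatize("meeting", "VERB"))  # meet
```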

- What is named entity recognition (NER) in NLP?

Answer: Named entity recognition (NER) is the task of identifying and categorizing named entities in unstructured text. Named entities are real-world objects usually referred to by proper nouns, such as people, organizations, and locations; many NER systems also tag expressions such as dates, times, and monetary amounts. NER is a crucial task in NLP because identifying the entities in a text is a first step toward understanding the relationships between them, for example in information extraction.

- What is topic modeling in NLP?

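Answer: Topic modeling is the process of automatically identifying and extracting the topics or themes that occur in a large corpus of text data; Latent Dirichlet Allocation (LDA) is a widely used technique. Topic modeling is useful in NLP because it helps us understand the main themes and ideas across a collection of documents without reading each one.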

- Suppose you have 80 balls, consisting of 40 red balls and 40 blue balls, and two buckets. You can distribute all the balls between the buckets in any way you like, as long as neither bucket is empty. One of the two buckets is then chosen at random, and one ball is drawn at random from it. How should you distribute the balls to maximize the probability of drawing a red ball?

Answer: Assuming each bucket is equally likely to be chosen, we should put a single red ball in one bucket and the remaining 79 balls (39 red and 40 blue) in the other bucket. This gives a probability of about 74.7% of drawing a red ball. That is higher than the 50% we would get by putting all the red balls in one bucket and all the blue balls in the other.

Each bucket is chosen with probability 1/2. The bucket with the single red ball always yields a red ball (probability 1). The other bucket contains 39 red and 40 blue balls, so the probability of drawing a red ball from it is 39/79.

The overall probability of drawing a red ball is the weighted average of these two probabilities: (1/2) * 1 + (1/2) * (39/79) = 59/79 ≈ 0.7468, or approximately 74.7%.
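A brute-force check confirms that no other split does better. It tries every way of placing red and blue balls in the first bucket (both buckets non-empty), assuming each bucket is picked with probability 1/2, and uses exact fractions to avoid rounding.

```python
from fractions import Fraction

def p_red(r1, b1, total_red=40, total_blue=40):
    """P(red) when bucket 1 holds r1 red and b1 blue balls; each bucket chosen with prob 1/2."""
    r2, b2 = total_red - r1, total_blue - b1
    return Fraction(1, 2) * Fraction(r1, r1 + b1) + Fraction(1, 2) * Fraction(r2, r2 + b2)

def best_split(total_red=40, total_blue=40):
    best = None
    for r1 in range(total_red + 1):
        for b1 in range(total_blue + 1):
            n1 = r1 + b1
            n2 = total_red + total_blue - n1
            if n1 == 0 or n2 == 0:
                continue  # both buckets must contain at least one ball
            p = p_red(r1, b1, total_red, total_blue)
            if best is None or p > best[0]:
                best = (p, r1, b1)
    return best

prob, red_in_first, blue_in_first = best_split()
print(prob, float(prob), red_in_first, blue_in_first)  # 59/79 0.7468... 1 0
```

The mirror-image split (39 red and 40 blue in the first bucket) ties, since it is the same arrangement with the buckets swapped.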

- Suppose we have hourly temperature readings for the city you live in, gathered over several years. Would random sampling be a good strategy if we want to work with a sample of the dataset? Why or why not?

Answer: No, simple random sampling is generally not a good strategy for hourly temperature data collected over several years. Consecutive hourly readings are strongly correlated over time (autocorrelated): each value depends heavily on the readings just before it, and the series follows daily and seasonal cycles. Picking random timestamps scatters the sample irregularly through time, which breaks the regular spacing and temporal order that time-series analysis relies on, and many downstream methods would then wrongly treat the sampled points as independent. Instead, you could consider time-based (systematic) sampling, where you take observations at regular intervals (for example, one reading every 6 hours) throughout the period you are interested in. This preserves the ordering and spacing of the data, so trends and patterns over time remain visible in a way that a random sample would make much harder to see.

Alternatively, you could consider using stratified sampling, where you divide the data into groups based on relevant characteristics (e.g., seasons, time of day, etc.) and then take a sample from each group. This would allow you to capture the variability in the data across different groups and ensure that your sample is representative of the entire dataset.
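Both strategies can be sketched in plain Python on synthetic data. The temperature series below is an artificial yearly-plus-daily sine pattern generated only for illustration, not real measurements. Seasons follow the Northern Hemisphere convention (Dec-Feb, Mar-May, Jun-Aug, Sep-Nov), and the helper names are hypothetical.

```python
import math
import random
from collections import defaultdict
from datetime import datetime, timedelta

def make_synthetic_hourly(start, hours):
    """Synthetic stand-in for real readings: a yearly cycle plus a daily cycle."""
    records = []
    for h in range(hours):
        ts = start + timedelta(hours=h)
        yearly = 10 * math.sin(2 * math.pi * ts.timetuple().tm_yday / 365.25)
        daily = 4 * math.sin(2 * math.pi * ts.hour / 24)
        records.append((ts, 12 + yearly + daily))
    return records

def systematic_sample(records, every_k):
    """Time-based sampling: every k-th record of a time-ordered series."""
    return records[::every_k]

def season(ts):
    """0 = Dec-Feb, 1 = Mar-May, 2 = Jun-Aug, 3 = Sep-Nov."""
    return (ts.month % 12) // 3

def stratified_sample(records, key, per_stratum, seed=0):
    """Draw up to per_stratum random records from each group, then restore time order."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for rec in records:
        strata[key(rec[0])].append(rec)
    sample = []
    for k in sorted(strata):
        group = strata[k]
        sample.extend(rng.sample(group, min(per_stratum, len(group))))
    sample.sort()  # timestamps are unique, so this sorts by time
    return sample

records = make_synthetic_hourly(datetime(2020, 1, 1), 24 * 731)  # two years, hourly
every_6h = systematic_sample(records, 6)
by_season = stratified_sample(records, season, per_stratum=100)
print(len(records), len(every_6h), len(by_season))  # 17544 2924 400
```

To stratify by time of day as well, a key such as lambda ts: (season(ts), ts.hour) would give one stratum per season and hour.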

- What are the characteristics of a normal distribution with respect to its standard deviation?